November 21, 2025

This blog provides essential strategies for optimizing Google Dataflow for high availability and performance. It covers key topics like Dataflow architecture, performance optimization techniques, and high availability planning. The post also explores advanced tools such as Dataflow Templates and Flexible Resource Scheduling (FlexRS), along with real-world case studies. Whether you're looking to improve efficiency or ensure reliability, this guide offers actionable insights for optimizing your Dataflow pipelines.

Are your Google Dataflow pipelines running at peak efficiency?

When every millisecond counts, high availability and optimal performance for your data processing pipelines are not a luxury; they are a necessity. With Google Dataflow, businesses can process vast amounts of data in real time or in batch mode, but the real power comes when these pipelines are optimized for both cost and performance.

In this guide, we’ll dive into the best strategies to optimize Google Dataflow for high availability and performance so your enterprise can achieve reliable, fast data processing while minimizing costs. Whether you’re dealing with streaming data or batch workloads, the right optimizations can drastically improve pipeline performance, reduce downtime, and lower costs, leading to quicker insights and more reliable business operations.

Let’s explore the architecture, performance strategies, monitoring tools, and case studies that will help you unlock the full potential of your Dataflow pipelines.

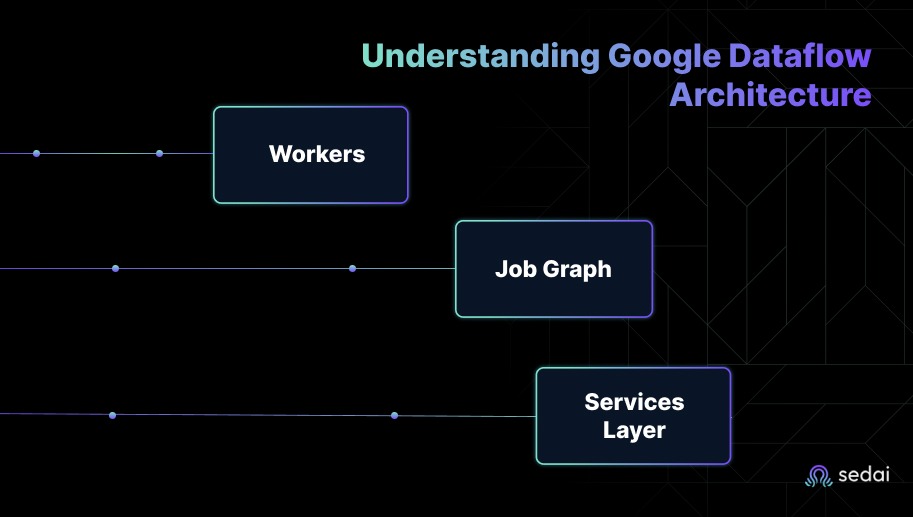

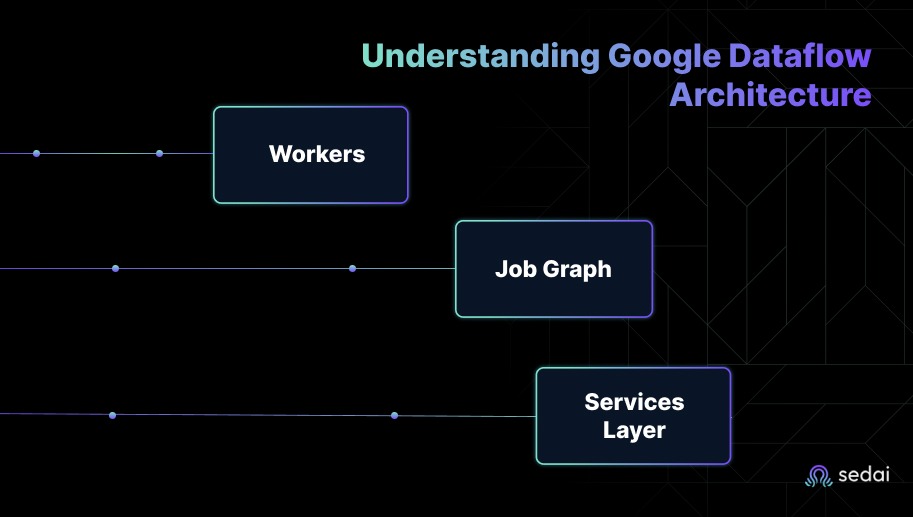

Before diving into optimization tactics, it’s important to understand how Google Dataflow fundamentally operates. A strong grasp of the architecture lays the foundation for making smarter design and scaling decisions.

At its core, Dataflow’s architecture consists of several critical components:

One key strength of Dataflow lies in how it abstracts resource management. It automatically schedules and scales work without manual intervention, but this also means that understanding internal mechanisms, such as how fusion occurs or how parallelism is handled, is crucial for fine-tuning performance and resilience.

Sedai, a leading autonomous cloud optimization platform, emphasizes the importance of understanding service architectures like Dataflow to design proactive scaling and monitoring strategies that prevent bottlenecks before they occur.

By appreciating these core elements early on, you can tailor your optimizations more precisely as we move forward.

Once you understand the architecture, the next logical step is ensuring your pipelines are always available, even during failures or sudden workload spikes. High availability must be deliberately planned into your Dataflow jobs.

Here’s how to build resilience:

Organizations like Sedai advocate for an automated, observability-driven approach where availability risks are dynamically detected and self-healed, minimizing human effort and downtime.

Building high availability into your design ensures that your business operations remain uninterrupted, even in challenging cloud conditions.

With availability under control, let’s tackle performance—a key pillar in optimizing Google Dataflow. Poorly optimized pipelines can inflate costs, cause unnecessary delays, and undermine user experience.

Essential strategies include:

Sedai consistently reminds teams that small design tweaks—like a more efficient combiner or smarter windowing—can slash Dataflow costs and runtime without needing expensive hardware upgrades.
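To make the combiner point concrete, here is a minimal sketch in plain Python rather than Beam code. It simulates two workers that each hold a batch of keyed records and compares how many records cross the shuffle boundary with and without per-worker pre-aggregation. The function names, keys, and values are made up for illustration, and a real runner decides for itself when and where partial combining happens.

```python
from collections import defaultdict


def shuffle_without_combiner(worker_batches):
    """Every (key, value) record crosses the shuffle boundary unchanged."""
    return [record for batch in worker_batches for record in batch]


def shuffle_with_combiner(worker_batches):
    """Each worker pre-sums its own records per key before the shuffle."""
    shuffled = []
    for batch in worker_batches:
        partial = defaultdict(int)
        for key, value in batch:
            partial[key] += value
        # Only one record per key per worker is sent onward.
        shuffled.extend(partial.items())
    return shuffled


def final_sum(shuffled):
    """Merge whatever arrived after the shuffle into per-key totals."""
    totals = defaultdict(int)
    for key, value in shuffled:
        totals[key] += value
    return dict(totals)


# Hypothetical input: two workers, each holding a few keyed amounts.
worker_batches = [
    [("card_a", 5), ("card_a", 7), ("card_b", 1)],
    [("card_a", 2), ("card_b", 4), ("card_b", 6)],
]

plain = shuffle_without_combiner(worker_batches)
combined = shuffle_with_combiner(worker_batches)

print(len(plain), len(combined))
print(final_sum(plain) == final_sum(combined))
```

Both paths produce the same totals, but the combined path moves fewer records between steps. This only works because summation is associative and commutative, which is the property a combiner relies on.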

Optimizing these elements ensures your pipelines are streamlined, efficient, and ready to handle complex, large-scale data operations.

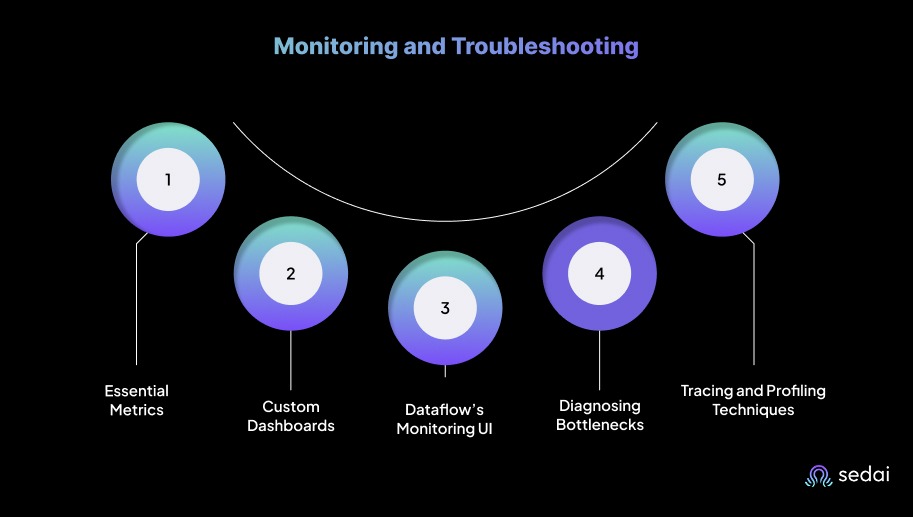

Even well-designed pipelines need continuous vigilance to maintain peak performance. Monitoring and quick troubleshooting are key to a healthy Dataflow environment.

Here’s how you can stay on top:

Sedai’s intelligent cloud operations platform uses automated monitoring and anomaly detection, helping organizations find and fix Dataflow inefficiencies much faster than manual methods.

Solid monitoring practices mean you can proactively fix problems—rather than reactively firefight when customers are already impacted.
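As a rough illustration of proactive checks, the sketch below compares one metric sample against fixed thresholds for system lag, worker utilization, and throughput. Everything here is an assumption for demonstration: the field names, the threshold values, and the idea that the sample has already been pulled from your monitoring system. It does not call any Dataflow or Cloud Monitoring API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical limits; tune these to your own pipeline's behavior.
    max_system_lag_s: float = 60.0
    max_cpu_utilization: float = 0.85
    min_throughput_eps: float = 1000.0


def find_alerts(sample, thresholds=None):
    """Return a list of human-readable alerts for one metric sample."""
    limits = thresholds or Thresholds()
    alerts = []
    if sample["system_lag_s"] > limits.max_system_lag_s:
        alerts.append("system lag above threshold")
    if sample["cpu_utilization"] > limits.max_cpu_utilization:
        alerts.append("worker utilization above threshold")
    if sample["throughput_eps"] < limits.min_throughput_eps:
        alerts.append("throughput below threshold")
    return alerts


# Hypothetical samples, as if exported from a dashboard.
healthy = {"system_lag_s": 12.0, "cpu_utilization": 0.55, "throughput_eps": 4200.0}
lagging = {"system_lag_s": 180.0, "cpu_utilization": 0.92, "throughput_eps": 4100.0}

print(find_alerts(healthy))
print(find_alerts(lagging))
```

Static thresholds like these are the simplest starting point. In practice you would likely alert on sustained breaches over a time window rather than on a single sample.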

Once your pipelines are stable and fast, it's time to push performance boundaries even further using advanced tactics.

Some powerful techniques include:

Forward-looking organizations, including Sedai’s customers, often incorporate these techniques to future-proof their pipelines against sudden traffic surges, regulatory demands, or shifting cost constraints.

Advanced optimization ensures your system doesn’t just perform well today—but continues to scale seamlessly tomorrow.

Theory becomes much more powerful when paired with real-world experience. Let’s look at two examples where optimizing Google Dataflow for high availability and performance yielded significant business outcomes.

Case Study 1: Streaming Pipeline Optimization

A financial services company processing billions of transactions needed real-time fraud detection with millisecond latency. By applying windowing strategies, tuning parallelism, and setting aggressive autoscaling parameters, they achieved:

Sedai’s autonomous optimization helped identify underutilized worker instances and recommend cost-saving configuration changes that had immediate ROI.

Case Study 2: Reliable Batch Processing at Scale

An e-commerce platform suffered frequent batch job failures during traffic spikes. By shifting to multi-regional deployments, implementing retry policies, and optimizing storage I/O, they:

These examples underscore that with the right optimization mindset—and the right partners like Sedai—you can turn Dataflow from a basic tool into a competitive advantage.

Optimization isn’t a one-time event. As business needs evolve, so must your pipelines.

Here’s how you can stay ahead:

Sedai’s continuous optimization approach enables organizations to automatically adjust configurations based on observed patterns, keeping pipelines future-ready with minimal manual intervention.

Being proactive about pipeline health and growth positions you to seize new opportunities rather than struggle to keep up.

Optimizing Google Dataflow for high availability and performance is critical for any organization serious about gaining timely, reliable insights from its data. By understanding Dataflow’s architecture, planning for availability, applying performance optimizations, and embracing continuous monitoring and refinement, you can achieve pipelines that not only meet today's demands but also scale effortlessly for tomorrow.

Tools like Sedai can supercharge your journey by providing intelligent optimization, proactive monitoring, and self-healing capabilities—freeing your team to focus more on innovation than operations.

Ready to take your Dataflow pipelines to the next level?

Start applying these best practices today and explore how automation platforms like Sedai can help you maximize your cloud investments!

Monitor key metrics like system lag, worker utilization, and throughput using Dataflow's Monitoring UI and custom dashboards.

Regional deployments run jobs within a single region, while multi-regional deployments provide redundancy across multiple regions for higher availability.

Sedai uses AI to dynamically adjust worker resources, autoscaling policies, and monitoring thresholds based on real-time behavior, maximizing both performance and cost-efficiency.

Combiners reduce the amount of data transferred between steps, minimizing shuffle costs and speeding up execution.

Flexible Resource Scheduling (FlexRS) is ideal for batch jobs that are time-flexible, allowing you to save costs by letting Google Cloud delay job start times slightly in exchange for discounted resources.
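For a time-flexible batch job, opting in to FlexRS is mostly a matter of pipeline options. The sketch below builds an argument list of the kind you might pass to an Apache Beam Python pipeline. The `--flexrs_goal=COST_OPTIMIZED` flag reflects the Beam Python SDK option as I understand it, so check it against the current Dataflow documentation before relying on it. The project, region, and bucket values are placeholders, and `build_flexrs_args` is a hypothetical helper, not part of any SDK.

```python
def build_flexrs_args(project, region, temp_location):
    """Assemble pipeline arguments for a batch job that opts in to FlexRS."""
    return [
        "--runner=DataflowRunner",
        f"--project={project}",
        f"--region={region}",
        f"--temp_location={temp_location}",
        # Assumed flag name and value; verify against the Dataflow docs.
        "--flexrs_goal=COST_OPTIMIZED",
    ]


# Placeholder values for illustration only.
args = build_flexrs_args(
    project="my-project",
    region="us-central1",
    temp_location="gs://my-bucket/tmp",
)
print(args)
```

Because FlexRS trades start-time certainty for lower cost, it suits nightly or weekly jobs with loose deadlines and does not apply to streaming pipelines.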
