November 21, 2025

November 20, 2025

AWS Graviton processors deliver strong performance-per-dollar value for general-purpose, compute-intensive, and memory-heavy workloads. This guide explores the evolution from Graviton2 to Graviton4, breaks down instance types, and explains when AWS Graviton is a smart choice. It also covers pricing, purchasing models, and migration considerations. Platforms like Sedai help teams unlock further value by automating performance optimization based on real workload data, without adding engineering overhead.

Cloud workloads are under constant pressure to do more with less. More traffic, tighter budgets, and faster response times are the new baseline. But many teams are still running on overprovisioned or outdated x86 infrastructure that adds cost without adding value.

In this guide, we’ll explore what AWS Graviton is, why it was built, and when it makes practical sense to adopt it. You’ll also see how Sedai helps teams make informed, automated decisions about using Graviton based on actual performance and cost data.

AWS Graviton was created because traditional x86 chips weren’t keeping up with cloud-native demands. Scaling often meant overpaying for unused compute just to hit performance targets. The architecture wasn’t built with cloud efficiency in mind.

By building its own ARM-based processors, AWS gained tighter control over performance and cost. Graviton lets teams right-size workloads without relying on brute-force provisioning. It’s a shift from legacy compute to something purpose-built for the cloud.

Next, let’s explore exactly what AWS Graviton is and how it drives those performance gains.

AWS Graviton isn’t winning because it’s trendy. It’s winning because it works, especially for teams trying to optimize spend without trading off performance.

Here’s what teams are getting out of the switch:

The result? You get compute that’s faster, cheaper, and built for how modern engineering teams actually deploy software.

After introducing its own custom silicon, AWS has steadily evolved the AWS Graviton processor family through three major production-ready generations: Graviton2, Graviton3, and Graviton4. Each leap brings improvements in performance, efficiency, and architecture support.

These AWS Graviton-based processors back a range of EC2 instance families, each tailored to different workload profiles:

All AWS Graviton instances are built on the Nitro System and support EBS optimization and enhanced networking, with Elastic Fabric Adapter (EFA) available on select instance types. These are not entry-level chips. They’re engineered to run production-grade systems at scale.

Knowing which generation and instance type to start with is key to unlocking AWS Graviton’s full cost-performance advantage, especially if you're tuning for compute, memory, or I/O-specific gains.
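As a rough illustration of how instance type names encode the processor, the sketch below splits a name such as `c6g.16xlarge` into family, generation, attributes, and size, and flags the `g` attribute that EC2 naming uses for Graviton. The function name and the returned dictionary keys are made up for this example, and the pattern only covers the common naming scheme, so treat it as a starting point rather than a complete parser.

```python
import re
from typing import Optional

# Common EC2 naming scheme: <family><generation><attributes>.<size>
# e.g. "c6g.16xlarge" -> family "c", generation 6, attributes "g", size "16xlarge".
_INSTANCE_RE = re.compile(r"^([a-z]+?)(\d+)([a-z-]*)\.([a-z0-9-]+)$")


def parse_instance_type(name: str) -> Optional[dict]:
    """Split an instance type name into its parts (hypothetical helper).

    Returns None for names that do not follow the common scheme.
    """
    match = _INSTANCE_RE.match(name.strip().lower())
    if match is None:
        return None
    family, generation, attributes, size = match.groups()
    return {
        "family": family,
        "generation": int(generation),
        "attributes": attributes,
        "size": size,
        # Assumption: a "g" among the attribute letters marks a Graviton CPU.
        # The first-generation A1 family has no such letter and is not detected.
        "graviton": "g" in attributes,
    }


for candidate in ("c6g.16xlarge", "m5.large", "r6gd.xlarge", "g5.xlarge"):
    info = parse_instance_type(candidate)
    print(candidate, "->", "Graviton" if info and info["graviton"] else "not Graviton")
```

A check like this can help when auditing an inventory export to see which instance families are already on Graviton and which are still x86.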

Migrating to AWS Graviton isn’t a drop-in replacement for every workload, but for most Linux-based applications, it’s surprisingly smooth. The key friction points typically surface when your stack includes architecture-specific binaries or unmanaged dependencies.
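One way to find architecture-specific binaries before a migration is to scan a build output or dependency directory for ELF files compiled for x86-64. The sketch below reads the `e_machine` field of each ELF header. The function names are invented for this example, and it assumes Linux-style ELF binaries such as shared libraries bundled inside language packages.

```python
import struct
from pathlib import Path
from typing import List, Optional

# e_machine values defined by the ELF specification.
EM_X86_64 = 62
EM_AARCH64 = 183


def elf_machine(header: bytes) -> Optional[int]:
    """Return the e_machine value from the first 20 bytes of a file,
    or None if the bytes are not an ELF header."""
    if len(header) < 20 or header[:4] != b"\x7fELF":
        return None
    # Byte 5 (EI_DATA) is 1 for little-endian and 2 for big-endian files.
    fmt = "<H" if header[5] == 1 else ">H"
    # e_machine is a 2-byte field at offset 18.
    return struct.unpack_from(fmt, header, 18)[0]


def find_x86_only_binaries(root: str) -> List[Path]:
    """List ELF files under root that were built for x86-64 (hypothetical helper)."""
    hits = []
    for path in Path(root).rglob("*"):
        if path.is_symlink() or not path.is_file():
            continue
        try:
            with path.open("rb") as handle:
                head = handle.read(20)
        except OSError:
            continue  # unreadable file: skip rather than fail the scan
        if elf_machine(head) == EM_X86_64:
            hits.append(path)
    return sorted(hits)
```

Pointing `find_x86_only_binaries` at a dependency directory gives a list of files that need an Arm64 build or a replacement. Pure-interpreted code does not show up in the results because it contains no compiled binaries.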

Here’s what usually works without issue:

What needs more attention:

A few practical tips:

Not every workload is a slam dunk for AWS Graviton. But if you’re running cloud-native apps with some flexibility in your stack, you’re likely leaving performance (and money) on the table by not considering it.

Graviton gives you room to optimize, but it's not about flipping a switch blindly. If your environment is flexible and your workloads are compute-bound, it's a clear win. If you're locked into rigid tooling or OS limitations, it's probably not worth the effort yet.

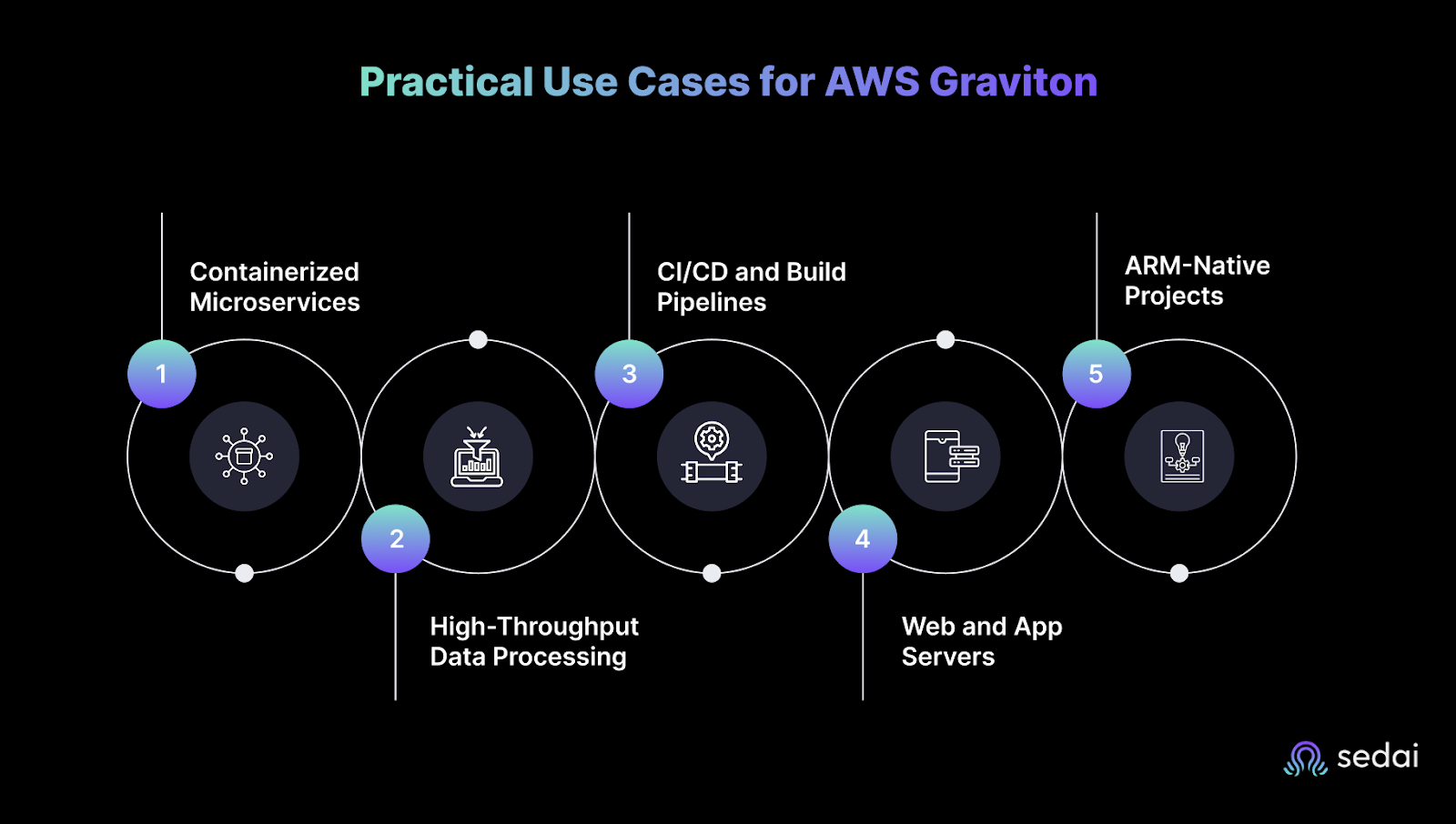

Once you've cleared compatibility and control hurdles, the next question is what exactly you should run on AWS Graviton. The short answer: anything compute-heavy, scalable, and flexible.

Here’s where teams are seeing real performance and cost benefits:

Whether you’re running on ECS, EKS, or Kubernetes-on-EC2, containerized workloads are quick wins for Graviton. ARM64 support is baked into Docker, and with multi-arch builds, most services don’t require major rewrites.
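Before scheduling a container onto Graviton nodes, it helps to confirm that the image was actually published for `linux/arm64`. The sketch below assumes you already have the image index as JSON, for example from `docker buildx imagetools inspect --raw <image>`, and that it follows the OCI image index layout with a `manifests` list. The function names and the sample document are illustrative only.

```python
import json
from typing import Set


def platforms_in_index(index_json: str) -> Set[str]:
    """Collect "os/architecture" strings from an OCI image index document."""
    document = json.loads(index_json)
    found = set()
    for entry in document.get("manifests", []):
        platform = entry.get("platform") or {}
        os_name = platform.get("os")
        arch = platform.get("architecture")
        # Attestation entries use "unknown" for both fields, so skip them.
        if os_name and arch and os_name != "unknown":
            found.add(f"{os_name}/{arch}")
    return found


def runs_on_graviton(index_json: str) -> bool:
    """True if the index lists a linux/arm64 variant (hypothetical helper)."""
    return "linux/arm64" in platforms_in_index(index_json)


# Illustrative index with two platform variants; digests are omitted for brevity.
sample_index = json.dumps({
    "schemaVersion": 2,
    "manifests": [
        {"platform": {"os": "linux", "architecture": "amd64"}},
        {"platform": {"os": "linux", "architecture": "arm64"}},
    ],
})

print(sorted(platforms_in_index(sample_index)))  # ['linux/amd64', 'linux/arm64']
print(runs_on_graviton(sample_index))            # True
```

A single-platform image returns a plain manifest with no `manifests` list, so this check reports no platforms for it. In that case the architecture has to be read from the image config instead.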

Best for:

Big data workloads, especially those using Spark, Flink, Kafka, or ClickHouse, see significant gains from Graviton’s enhanced memory bandwidth and better performance per watt.

Best for:

Graviton instances make solid runners for fast, cost-efficient builds, especially for projects already targeting ARM (mobile, edge, or containerized deployments). Some teams run ARM-native test jobs in parallel with x86 to compare runtime behavior.

Best for:

Traditional web stacks built on Nginx, Node.js, Spring Boot, or Django transition well to Graviton, especially if you’re already containerized or running on Amazon Linux 2 (AL2) or Ubuntu.

Best for:

If you’re building for edge devices, mobile hardware, or IoT gateways, Graviton helps maintain consistent performance characteristics between dev, test, and production environments.

Best for:

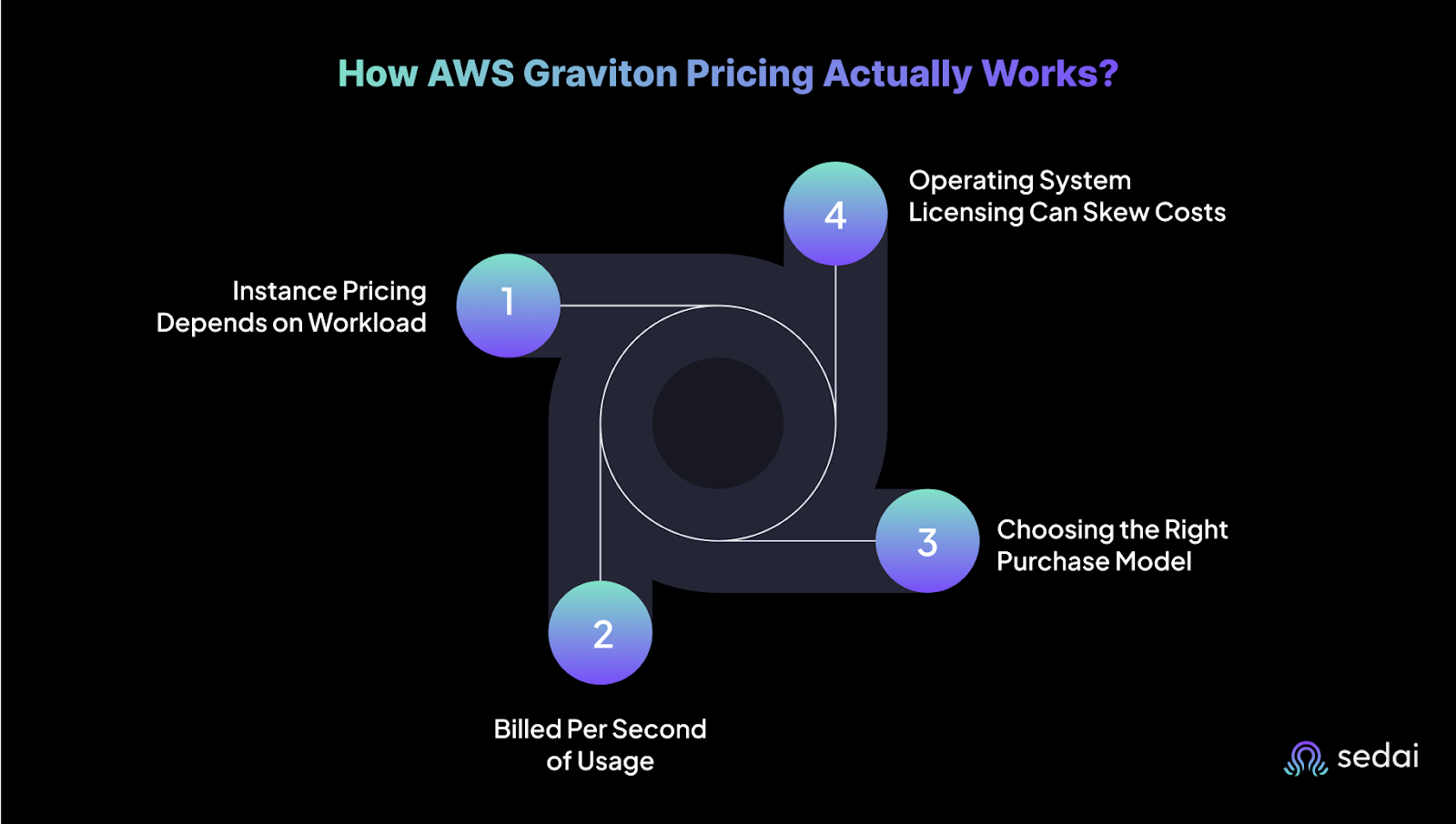

Graviton instances are known for being cost-effective, but savings only materialize when pricing choices align with workload demands and usage patterns.

Here’s what engineers should keep in mind:

Each Graviton instance type is optimized for different workload profiles:

Pricing scales with instance size (for example, c6g.medium to c6g.16xlarge) and also varies by region. A configuration that is affordable in Northern Virginia (us-east-1) might cost significantly more in Singapore (ap-southeast-1).

You are billed per second with a 60-second minimum. This model is efficient for workloads that are bursty, short-lived, or event-driven, such as CI pipelines, auto-scaling APIs, or development environments.
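The effect of the 60-second minimum on short jobs can be worked out directly. The sketch below is a minimal model of that rule only. The hourly rate is a placeholder rather than a real AWS price, and the function names are invented for the example.

```python
import math

MIN_BILLED_SECONDS = 60  # the 60-second minimum described above


def billed_seconds(runtime_seconds: float) -> int:
    """Seconds charged for one instance run under per-second billing."""
    if runtime_seconds <= 0:
        return 0  # assumption: an instance that never ran is not billed
    return max(MIN_BILLED_SECONDS, math.ceil(runtime_seconds))


def run_cost(runtime_seconds: float, hourly_rate: float) -> float:
    """Cost of one run, given an hourly On-Demand rate in any currency."""
    return billed_seconds(runtime_seconds) * hourly_rate / 3600


HYPOTHETICAL_HOURLY_RATE = 0.04  # placeholder value, not an actual price

for runtime in (20, 45, 90, 600):
    cost = run_cost(runtime, HYPOTHETICAL_HOURLY_RATE)
    print(f"{runtime:>4}s run -> {billed_seconds(runtime):>4}s billed, cost {cost:.6f}")
```

A 20-second job and a 45-second job are both billed as 60 seconds, so very short tasks pay the same floor. Batching several of them onto one instance run avoids paying the minimum repeatedly.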

There are three common ways to pay:

Many teams mix these models to optimize for both flexibility and cost control across dev, staging, and production.

Graviton delivers the best value when paired with Linux or other open-source operating systems. Graviton-based EC2 instances do not run Windows Server, so Windows workloads stay on x86 instances, where license-included pricing adds to the hourly rate, and they cannot share in the Graviton cost advantage. If you're planning a large migration, align OS choices with cost targets early on.

Many teams have made the switch to AWS Graviton for better performance and cost efficiency, but managing those gains over time is where things get challenging. Instance choices, workload patterns, and scaling demands can shift quickly, and without the right visibility, teams risk underutilizing the very advantages they moved for.

That’s why more companies are turning to platforms like Sedai. These tools help automate workload tuning, identify cost-performance gaps, and continuously adapt Graviton usage based on real-time behavior. The goal is not to replace engineers but to give them the insight and automation needed to make smarter, faster decisions at scale.

Graviton has come a long way from being a niche alternative to x86. With stronger performance across generations, tailored instance types, and lower costs, it’s now a serious choice for modern cloud workloads.

But migrating is only part of the equation. To truly get value from AWS Graviton, teams need to continually tune for performance and efficiency, especially as environments grow more complex. Platforms like Sedai help automate that effort, so engineers can focus on building rather than chasing down performance issues.

Curious how Sedai could fit into your AWS Graviton setup? Take a closer look at how it works.

Graviton is ideal for compute-intensive, memory-optimized, and burstable workloads, like microservices, databases, and machine learning inference.

Not directly. Compiled code has to be rebuilt for the Arm64 (aarch64) architecture, and any x86-only dependencies need an Arm64 build or a replacement. The cost savings can justify the effort.

Graviton is supported across EC2, ECS, EKS, RDS, Aurora, Lambda, ElastiCache, EMR, and more.

Sedai analyzes your workload’s real-time behavior and automatically shifts to optimal instance types, Graviton included, for better cost and performance.

Graviton offers better performance per dollar, but results vary based on workload characteristics. Continuous evaluation is key, which Sedai automates.
