November 21, 2025

November 20, 2025


This blog breaks down Google Dataflow pricing by highlighting key cost drivers like compute, shuffle, and disk usage. It shows you how to estimate and control costs using Google Cloud tools and how Sedai goes further with autonomous cost optimization.

Cloud data processing isn’t just about moving data from point A to point B; it’s about doing it fast, reliably, and without burning a hole in your budget. But with unpredictable usage patterns, long-running jobs, and scaling complexity, keeping costs in check often feels like shooting in the dark.

That’s where Google Dataflow steps in. It gives you a fully managed, autoscaling stream and batch processing service with detailed, per-second billing so you can process massive datasets efficiently and control costs at every stage.

Let’s break down what Google Dataflow is, when it makes sense to use it, and how it actually works.

You don’t have time to babysit data pipelines. When streaming jobs stall, batch jobs lag, or infrastructure spikes costs overnight, it’s more than a hiccup; it’s hours lost, SLAs missed, and on-call stress you didn’t need.

Google Dataflow is a fully managed stream and batch data processing service built to take that pressure off. It autoscales your resources, handles failures behind the scenes, and charges only for what you use, with no pre-provisioning and no capacity guesswork. You write the pipeline logic, and it takes care of the rest.

Coming up: When to use Dataflow and how it actually works behind the scenes.

When you’re under pressure to process massive data volumes quickly, without babysitting infrastructure or blowing your budget, Google Dataflow gives you room to breathe. It’s a fully managed, no-ops stream and batch processing service that lets you focus on what matters: building reliable pipelines that scale with your data.

Here’s how it delivers across your most critical needs.

Google Dataflow shines when you’re dealing with high-throughput pipelines, real-time event streams, or complex batch jobs. It’s a go-to for use cases where data velocity, volume, and flexibility all matter at once.

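Use Dataflow for real-time analytics, large-scale data cleansing, data preparation for machine learning, and ETL pipelines.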

No clusters, no manual scaling, no babysitting: just code, deploy, and move on.

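Google Dataflow is built for modern data operations. You don’t have time for sluggish processing or rigid infrastructure, so it gives you autoscaling workers, built-in fault tolerance, one programming model for both batch and streaming, and per-second billing.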

It’s designed for the way you work, not the other way around.

At its core, Dataflow runs on Apache Beam’s unified programming model. You build a pipeline with a Beam SDK, and Dataflow handles the orchestration: scaling resources, optimizing execution, and maintaining fault tolerance.

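Here’s the typical flow: you write a Beam pipeline that reads from a source (such as Pub/Sub or Cloud Storage), applies transforms, and writes to a sink (such as BigQuery). You submit it to Dataflow, which optimizes the execution graph, provisions workers, and scales them as data volume changes. When a batch job finishes, the workers are shut down.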

Whether it’s continuous data streams or periodic batch loads, Dataflow handles the heavy lifting behind the scenes.

Up next: let’s break down the core pricing components that actually drive your Google Dataflow costs.

If you're seeing unpredictable spikes in your Dataflow bills, they’re not random; they’re structural. Google Dataflow pricing breaks down into several moving parts, and understanding each one is key to keeping your cloud spend under control. Here's how your cost adds up across the pipeline.

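Google Dataflow bills worker resources in per-second increments based on what your jobs actually consume. That means you pay for the vCPUs and memory your workers hold for as long as they run, whether or not they are doing useful work.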

This pricing structure is efficient, but only if your pipeline is properly tuned. Over-provisioned workers or idle processing? You’ll pay for that too.
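To see why idle or oversized workers show up on the bill, here’s a minimal Python sketch of per-second compute billing. The rates are hypothetical placeholders, not Dataflow’s published prices, and the model covers only worker vCPU and memory.

```python
# Hypothetical placeholder rates, NOT Dataflow's published prices.
# Look up the current rates for your region before using numbers like these.
VCPU_RATE_PER_HOUR = 0.05        # assumed USD per vCPU-hour
MEMORY_RATE_PER_GB_HOUR = 0.005  # assumed USD per GB-hour


def worker_compute_cost(num_workers: int, vcpus_per_worker: int,
                        memory_gb_per_worker: float, seconds: int) -> float:
    """Estimate vCPU + memory cost for workers billed in per-second increments."""
    hours = seconds / 3600  # per-second billing: partial hours are prorated
    vcpu_cost = num_workers * vcpus_per_worker * hours * VCPU_RATE_PER_HOUR
    memory_cost = num_workers * memory_gb_per_worker * hours * MEMORY_RATE_PER_GB_HOUR
    return vcpu_cost + memory_cost


# Same 30-minute runtime, two pool sizes: the bill scales with worker count.
oversized = worker_compute_cost(num_workers=10, vcpus_per_worker=4,
                                memory_gb_per_worker=15, seconds=1800)
rightsized = worker_compute_cost(num_workers=4, vcpus_per_worker=4,
                                 memory_gb_per_worker=15, seconds=1800)
print(f"oversized: ${oversized:.3f}, rightsized: ${rightsized:.3f}")
```

If the larger pool doesn’t finish any faster (for example, because the bottleneck is an external sink), it costs 2.5 times as much for the same result.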

Every worker uses disk space. You’re billed for the persistent disks attached to workers, and you can choose between standard (HDD-backed) and SSD persistent disks.

Choosing the right disk type depends on your pipeline’s workload, but poor alignment here often results in both overpayment and underperformance.

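Data movement within your pipeline, often invisible during development, becomes very visible on your billing dashboard. Shuffle, the step that regroups data by key for operations such as GroupByKey, is billed separately from worker compute when it runs on Dataflow Shuffle (batch) or Streaming Engine (streaming).

Designing pipelines that minimize shuffle and streamline data paths isn’t just good practice; it’s a cost-cutting strategy.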
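The payoff from minimizing shuffle is easiest to see with an aggregation. In Beam, `CombinePerKey` lets Dataflow pre-aggregate values on each worker before the shuffle (combiner lifting), whereas a `GroupByKey` followed by a sum ships every element across it. The plain-Python sketch below models that difference under simplifying assumptions: it counts records rather than bytes, the worker partitions are hypothetical, and it does not use the Beam SDK.

```python
from collections import defaultdict

# Each inner list stands for the (key, value) elements held by one worker
# before the shuffle. This is a simplified model, not Dataflow internals.
Partition = list[tuple[str, int]]


def records_shuffled_group_then_sum(partitions: list[Partition]) -> int:
    """GroupByKey followed by a sum: every element crosses the shuffle."""
    return sum(len(p) for p in partitions)


def records_shuffled_combine_per_key(partitions: list[Partition]) -> int:
    """Combine per key: each worker emits one partial sum per key it holds."""
    return sum(len({key for key, _ in p}) for p in partitions)


def combine_per_key(partitions: list[Partition]) -> dict[str, int]:
    """Pre-aggregate locally on each worker, then merge the partial sums."""
    totals: dict[str, int] = defaultdict(int)
    for partition in partitions:
        local: dict[str, int] = defaultdict(int)
        for key, value in partition:
            local[key] += value          # local combine, before the shuffle
        for key, partial in local.items():
            totals[key] += partial       # merge partials after the shuffle
    return dict(totals)


workers = [
    [("checkout", 1)] * 1000 + [("search", 1)] * 500,
    [("checkout", 1)] * 800,
]
print(records_shuffled_group_then_sum(workers))   # every element is shuffled
print(records_shuffled_combine_per_key(workers))  # one record per key per worker
print(combine_per_key(workers))                   # same totals either way
```

Both approaches produce the same totals; the combine version moves 3 records through the shuffle instead of 2,300.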

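Dataflow Prime introduces DCUs (Data Compute Units), a bundled unit that meters compute, memory, and shuffle or Streaming Engine data processing together; persistent disk is still billed separately.

It simplifies billing but isn’t always cheaper. Prime tends to pay off when its efficiency features, such as vertical autoscaling and right fitting, trim enough waste to offset the difference.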

If you're processing sensitive or regulated data, you can run your pipeline on Confidential VMs, offering hardware-based memory encryption and isolated environments.

Unless security is a top priority, Confidential VMs can quietly increase your compute bill without adding much value.

Each component plays a role in how your final Dataflow bill is shaped. Next, let’s look at how Google’s pricing models and regional differences affect those numbers.

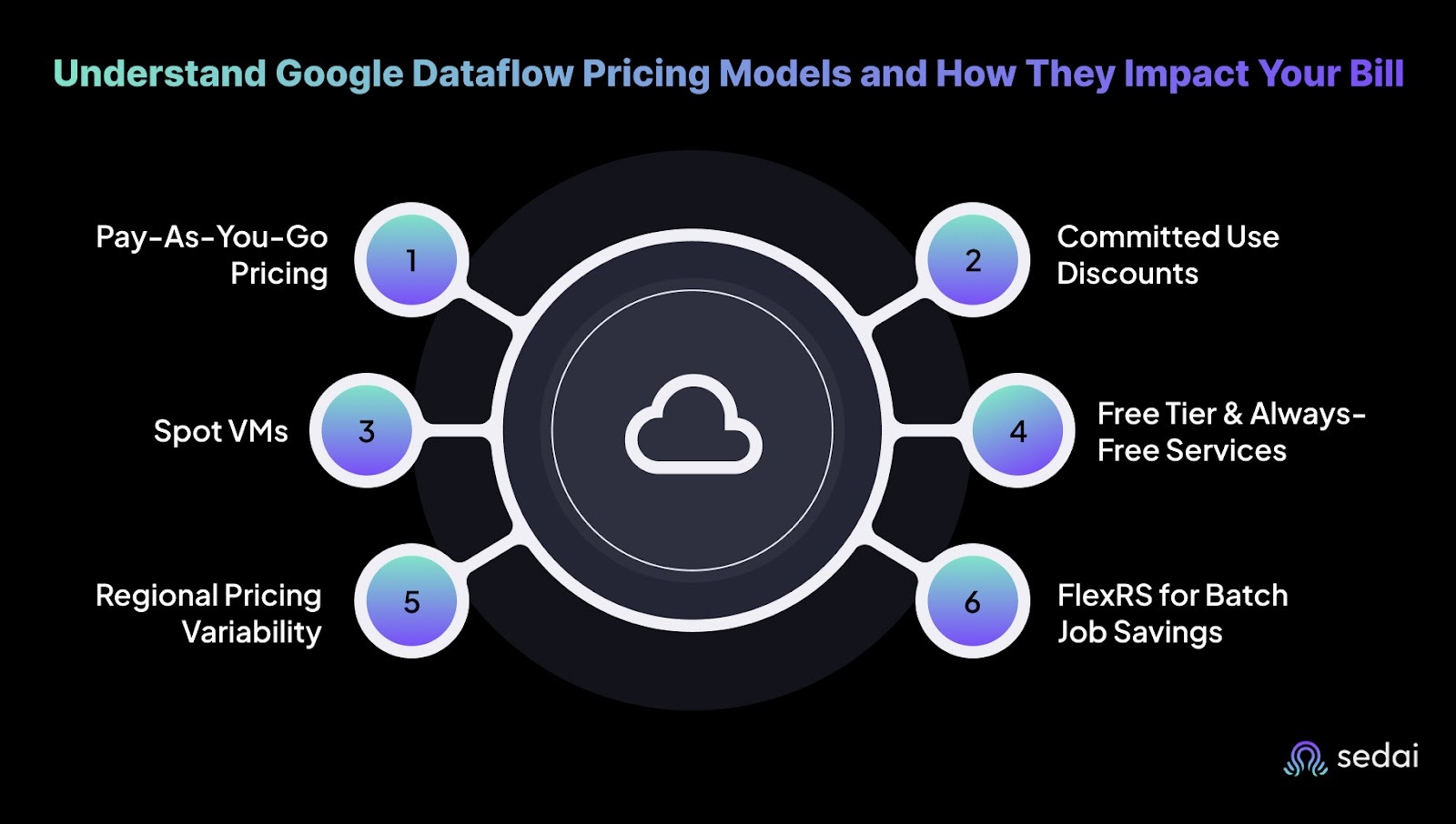

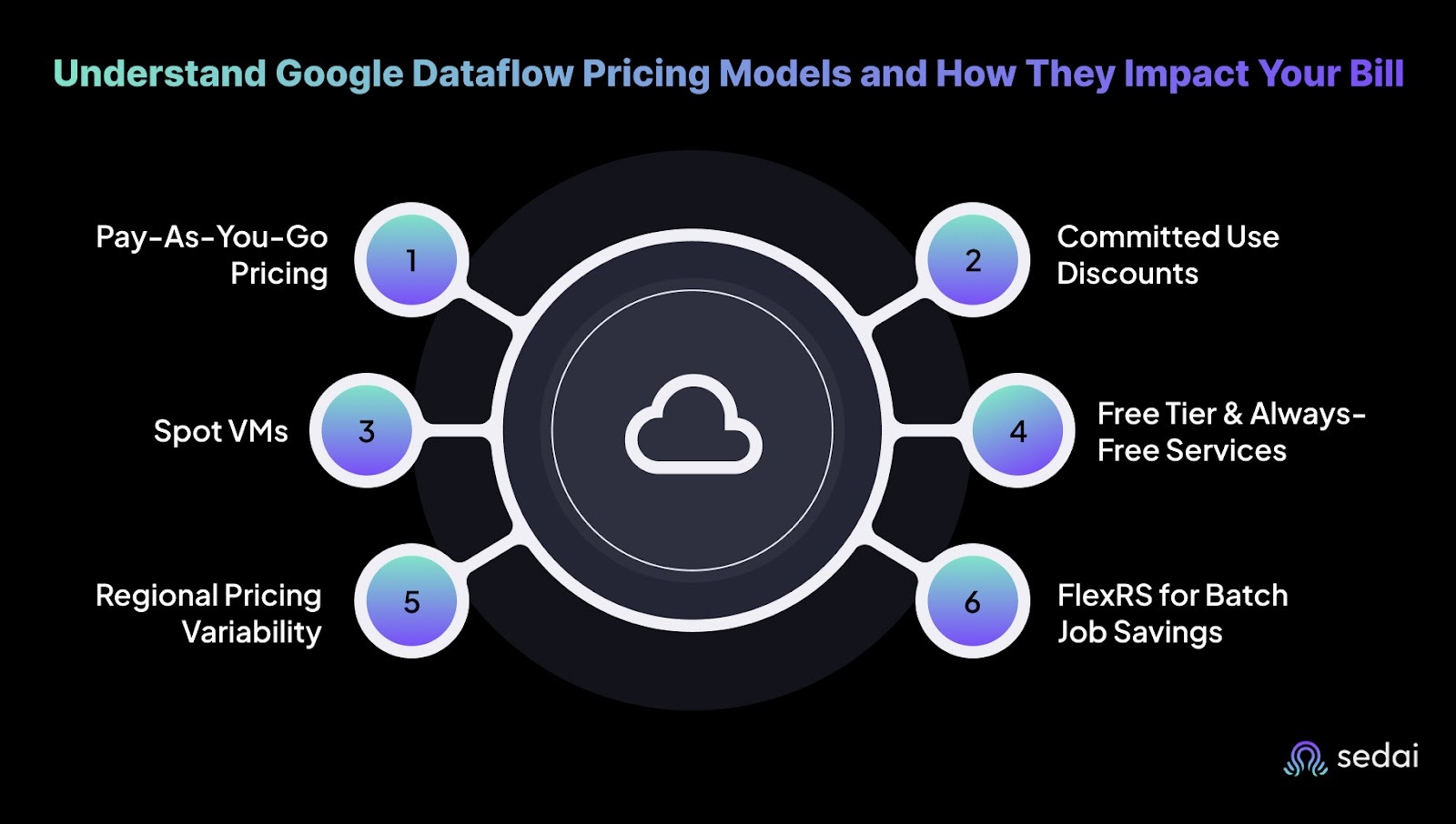

Predicting cloud costs is already hard. But when you’re running high-throughput data pipelines, one unexpected spike can leave you explaining a bill no one saw coming. Google Dataflow offers several pricing models and regional rates to help you stay in control, if you know how to use them.

Let’s break them down so you can choose what works best for your budget and performance needs.

If your workloads are unpredictable or seasonal, the pay-as-you-go model gives you full flexibility: you’re billed by the second for the resources your jobs use, with no upfront commitment.

This model works well for teams still experimenting or scaling gradually. But the freedom comes at a cost: it’s the most expensive option on a per-hour basis.

When you know what compute resources you’ll need long-term, committed use discounts (CUDs) trade a one- or three-year spending commitment for lower rates, and the longer term earns the larger discount.

This model is ideal for production pipelines with steady, predictable throughput. You pay less per hour, and the savings add up over time.
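Whether a commitment pays off comes down to how much of it you’ll actually use. This minimal sketch assumes a spend-based commitment with a flat discount and usage at or below the committed level; the discount values are hypothetical, not quoted Dataflow rates.

```python
def breakeven_utilization(discount: float) -> float:
    """Fraction of the committed level you must actually use for a commitment to pay off.

    Model: committing costs committed_spend * (1 - discount) every hour regardless
    of use, while on-demand costs utilization * committed_spend.
    """
    if not 0 <= discount < 1:
        raise ValueError("discount must be in [0, 1)")
    return 1 - discount


def commitment_saves_money(discount: float, expected_utilization: float) -> bool:
    """True if committing is cheaper than paying on-demand for the same usage."""
    return expected_utilization > breakeven_utilization(discount)


# Hypothetical discounts, for illustration only.
print(commitment_saves_money(discount=0.20, expected_utilization=0.70))  # False
print(commitment_saves_money(discount=0.40, expected_utilization=0.70))  # True
```

In other words, a commitment only wins when you reliably use more than `1 - discount` of what you committed to.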

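Need to run batch jobs or flexible workloads at rock-bottom prices? Spot VMs offer 60–91% discounts off on-demand Compute Engine prices, with the tradeoff that they can be reclaimed at any time. They’re a good fit for fault-tolerant batch jobs that can absorb interruptions.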

Just don’t run anything mission-critical on them unless you’ve built for interruptions.
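A Spot discount only helps if interruptions don’t eat it. The sketch below is a deliberately simple expected-cost model, assuming interruptions add a fixed average fraction of rework; real preemption behavior varies, and the numbers are hypothetical.

```python
def expected_spot_cost(on_demand_cost: float, spot_discount: float,
                       rework_fraction: float) -> float:
    """Expected cost on Spot VMs when interruptions force some work to be redone.

    rework_fraction is an assumed average: 0.25 means preemptions add 25% extra work.
    """
    return on_demand_cost * (1 - spot_discount) * (1 + rework_fraction)


def max_tolerable_rework(spot_discount: float) -> float:
    """Rework fraction at which Spot stops being cheaper than on-demand."""
    return 1 / (1 - spot_discount) - 1


print(expected_spot_cost(on_demand_cost=100.0, spot_discount=0.6, rework_fraction=0.25))
print(max_tolerable_rework(0.6))
```

At a 60% discount, a job could redo up to 150% of its work before Spot becomes more expensive, which is why interruption-tolerant design matters more than the headline discount.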

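You can test the waters with the Google Cloud Free Trial, which gives new customers $300 in credits to use within 90 days. Dataflow has no “always free” allowance of its own, although services your pipelines touch, such as Cloud Storage, Pub/Sub, and BigQuery, do.

Those free allowances are great for early-stage development or evaluation. But the moment you go over the limits or your credits run out, you’re back on pay-as-you-go rates, so watch your usage closely.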

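Dataflow prices are set per region: vCPU, memory, persistent disk, and shuffle rates differ between regions, and network egress adds its own location-dependent charges. Picking the right region for your workloads can shave off real dollars, as long as it stays close to your data sources and sinks so that latency and cross-region transfer charges don’t cancel out the savings.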

If you’re running batch pipelines that don’t need instant results, FlexRS (Flexible Resource Scheduling) reduces costs by combining preemptible and regular VMs at a discounted rate, in exchange for letting Dataflow delay the job’s start by up to six hours. It’s a smart option when speed isn’t your #1 priority but budget is.
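In the Beam Python SDK, FlexRS is requested through the `--flexrs_goal=COST_OPTIMIZED` pipeline option. This sketch only assembles the options as a list of strings so it runs without Beam installed; with the SDK, you would pass the list to `apache_beam.options.pipeline_options.PipelineOptions`. The project, region, and bucket names are hypothetical.

```python
def flexrs_pipeline_args(project: str, region: str, temp_location: str) -> list[str]:
    """Pipeline options for a Beam Python batch job submitted to Dataflow with FlexRS."""
    return [
        "--runner=DataflowRunner",
        f"--project={project}",
        f"--region={region}",
        f"--temp_location={temp_location}",  # Cloud Storage path for temporary files
        "--flexrs_goal=COST_OPTIMIZED",     # opt in to FlexRS scheduling
    ]


# Hypothetical project, region, and bucket names.
args = flexrs_pipeline_args("my-project", "us-central1", "gs://my-bucket/tmp")
print(" ".join(args))
```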

Next, we’ll walk through how to estimate your Google Dataflow costs using the console, CLI, and billing tools.

Dataflow cost spikes usually surface after the job has run, and by then it’s too late. You need ways to estimate costs upfront, break them down by component, and track actual usage over time. Here's how to make sure you don’t get blindsided by your next pipeline run.

The Cost tab inside the Cloud Console gives you live cost visibility during and after a job run.

Best for: spotting issues in live or recently completed jobs.

Before your job runs, use the Google Cloud Pricing Calculator to model expected costs.

Best for: forecasting budget impact and planning capacity before deployment.

Want more control than the calculator? Use the gcloud CLI to pull a job’s resource metrics, or build your own formulas from Dataflow’s per-vCPU-hour, per-GB-hour memory and disk, and per-GB shuffle rates.
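A do-it-yourself estimate is just usage multiplied by unit rates, summed per component. The usage figures could come from a finished job’s resource metrics (for example via `gcloud dataflow metrics list`); the field names and rates below are hypothetical, and the model ignores GPUs, Streaming Engine, and discounts.

```python
from dataclasses import dataclass


@dataclass
class JobUsage:
    vcpu_hours: float
    memory_gb_hours: float
    disk_gb_hours: float
    shuffle_gb: float


@dataclass
class Rates:
    """Hypothetical unit prices; substitute your region's published Dataflow rates."""
    per_vcpu_hour: float
    per_memory_gb_hour: float
    per_disk_gb_hour: float
    per_shuffle_gb: float


def estimate_cost(usage: JobUsage, rates: Rates) -> dict[str, float]:
    """Break a job's estimated cost down by component, plus a total."""
    breakdown = {
        "vcpu": usage.vcpu_hours * rates.per_vcpu_hour,
        "memory": usage.memory_gb_hours * rates.per_memory_gb_hour,
        "disk": usage.disk_gb_hours * rates.per_disk_gb_hour,
        "shuffle": usage.shuffle_gb * rates.per_shuffle_gb,
    }
    breakdown["total"] = sum(breakdown.values())
    return breakdown


# Hypothetical usage and rates, for illustration only.
usage = JobUsage(vcpu_hours=120.0, memory_gb_hours=450.0,
                 disk_gb_hours=3000.0, shuffle_gb=200.0)
rates = Rates(per_vcpu_hour=0.05, per_memory_gb_hour=0.005,
              per_disk_gb_hour=0.0001, per_shuffle_gb=0.01)
for component, cost in estimate_cost(usage, rates).items():
    print(f"{component:>8}: ${cost:.2f}")
```

Keeping the breakdown per component, rather than a single total, makes it obvious which lever (workers, disk, or shuffle) is worth pulling first.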

Best for: custom estimates, power users, and teams running cost modeling at scale.

Estimates are good, but actuals are better. Export billing data to BigQuery for in-depth analysis.

Best for: large-scale cost visibility, finance tracking, and cost allocation by project or environment.
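Once billing data lands in BigQuery, cost allocation usually means grouping cost by a label. In SQL you would `UNNEST(labels)` and `GROUP BY` the label value; the Python sketch below does the same over rows you have already fetched, using a simplified row shape and a hypothetical `team` label.

```python
from collections import defaultdict


def cost_by_label(rows: list[dict], label_key: str) -> dict[str, float]:
    """Sum the cost of billing-export rows, grouped by one label's value.

    Each row mimics a simplified billing export record: a "cost" number and a
    "labels" list of {"key": ..., "value": ...} entries.
    """
    totals: dict[str, float] = defaultdict(float)
    for row in rows:
        labels = {item["key"]: item["value"] for item in row.get("labels", [])}
        totals[labels.get(label_key, "(unlabeled)")] += row["cost"]
    return dict(totals)


# Hypothetical rows; a real export has many more columns.
rows = [
    {"cost": 1.5, "labels": [{"key": "team", "value": "ingest"}]},
    {"cost": 2.5, "labels": [{"key": "team", "value": "ingest"}]},
    {"cost": 1.0, "labels": []},
]
print(cost_by_label(rows, "team"))
```

Surfacing the “(unlabeled)” bucket explicitly is deliberate: it shows how much spend you can’t yet attribute to anyone.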

These tools help you move from guesswork to accurate cost forecasting before and after your Dataflow jobs run.

Also read: Cloud Automation to understand how autonomous systems like Sedai automate cost and performance management in real time.

You shouldn’t have to wait for a surprise bill to find out a single Dataflow pipeline quietly burned through thousands. Cost overruns in stream and batch processing happen fast, especially with autoscaling, retries, and long-running jobs. That’s why real-time monitoring and control aren’t optional; they’re critical.

Google Cloud Monitoring offers detailed insights into how your pipelines are performing, and what they’re costing, down to the hour.

You get real-time dashboards that track job metrics such as throughput, system lag, data freshness, and worker CPU utilization.

You can slice this data by job name, region, resource type, or time window, whether you want a top-down view or detailed forensic analysis. This granularity is especially useful when you're trying to justify optimizations or answer a finance team that’s asking, “Why did costs double yesterday?”
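Answering “Why did costs double yesterday?” starts with noticing that they did. A minimal sketch, assuming you already have daily cost totals (for example from a billing export): flag any day that costs at least twice its trailing seven-day average. The window and factor are arbitrary defaults, not Google recommendations.

```python
from statistics import mean


def flag_cost_spikes(daily_costs: list[float], window: int = 7,
                     factor: float = 2.0) -> list[int]:
    """Return indices of days costing at least `factor` times the trailing average."""
    spikes = []
    for day in range(window, len(daily_costs)):
        baseline = mean(daily_costs[day - window:day])  # the previous `window` days
        if baseline > 0 and daily_costs[day] >= factor * baseline:
            spikes.append(day)
    return spikes


# Hypothetical daily Dataflow spend in USD.
history = [10.0] * 7 + [25.0, 11.0]
print(flag_cost_spikes(history))
```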

Monitoring is only half the battle. Google Dataflow integrates with Cloud Monitoring to let you set cost or usage thresholds, so you’re not just watching costs but actively managing them.

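You can configure alerting policies on Dataflow metrics such as system lag, data freshness, or CPU utilization, and pair them with Cloud Billing budget alerts that notify you when spend crosses a percentage of your budget.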

These alerts help you catch issues like inefficient code paths, oversized workers, or stuck streaming jobs before they run wild.
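Budget alerts in Cloud Billing fire as spend crosses percentage thresholds of a budget. This sketch mirrors that logic locally, for example to decide when an automated check should page someone; the threshold values are illustrative, and the function is not part of any Google Cloud API.

```python
def crossed_thresholds(spend: float, budget: float,
                       thresholds: tuple[float, ...] = (0.5, 0.9, 1.0)) -> list[float]:
    """Return the budget fractions that current spend has reached or passed."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    return [t for t in thresholds if spend >= t * budget]


print(crossed_thresholds(spend=950.0, budget=1000.0))
```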

Want to dive deeper? Export billing data to BigQuery for advanced queries. This is perfect for historical trend analysis, chargeback, and cost allocation by label, project, or environment.

You can also correlate logs from Dataflow jobs with resource metrics to pinpoint what caused a specific spike, whether it was a malformed input, a bug in user code, or an unoptimized join operation.

Monitoring isn’t just for visibility; it’s your first step toward true cost optimization. When paired with autoscaling, preemptible VMs, or autonomous platforms like Sedai, these signals become actionable triggers to automatically improve efficiency, without waiting for human intervention.

Real control comes from real-time data + automated action. That’s how you prevent waste, reduce risk, and keep cloud costs aligned with business goals.

Next up: Let’s look at the most effective cost optimization strategies you can use to lower your Google Dataflow costs, without sacrificing performance.

If you're running Google Dataflow pipelines without tuning for cost, you're likely spending money you don’t need to. Most teams overprovision by default, pay full on-demand rates for batch jobs that could wait, and leave autoscaling and monitoring half-configured. That’s not sustainable. Here’s how to stop the silent drain and build pipelines that are smart, fast, and efficient, without wasting a cent.

Dataflow costs stack up fast when your pipelines are oversized or your resources aren’t matched to the job.

Sedai’s autonomous system goes a step further by analyzing real-time performance and cost data to rightsize your workers continuously, so you stop overpaying without lifting a finger.
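The core arithmetic behind a one-off rightsizing decision is simple. The sketch below, which is not Sedai’s algorithm, scales the worker count so average CPU utilization lands near a target, assuming a CPU-bound job whose load spreads evenly across workers; the 70% target is an arbitrary example.

```python
import math
from typing import Optional


def rightsized_workers(current_workers: int, avg_cpu_utilization: float,
                       target_utilization: float = 0.7, min_workers: int = 1,
                       max_workers: Optional[int] = None) -> int:
    """Worker count that would bring average CPU utilization near the target.

    Assumes a CPU-bound job whose work spreads evenly across workers.
    """
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    needed = math.ceil(current_workers * avg_cpu_utilization / target_utilization)
    needed = max(min_workers, needed)
    if max_workers is not None:
        needed = min(max_workers, needed)
    return needed


# 20 workers averaging 30% CPU: roughly 9 workers would run near 70%.
print(rightsized_workers(current_workers=20, avg_cpu_utilization=0.3))
```

A result like this is a starting point for worker limits, not a replacement for watching throughput and lag after the change.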

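Many batch jobs don’t need instant results; they need to be cheap and efficient. FlexRS (Flexible Resource Scheduling) is built exactly for that.

Submit non-urgent jobs with FlexRS and let Dataflow schedule them within its delay window. Dataflow rates don’t change by time of day, so the savings come from FlexRS’s discounted resources, not from running at night. It’s an easy win for teams running regular ETL jobs or ML data preparation that doesn’t need real-time results.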

Data shuffle in Dataflow is a silent budget killer: it drives up your costs without drawing attention until the bill hits.

Sedai helps you identify shuffle-heavy steps in your pipeline, then suggests or automatically applies restructuring options, saving both compute and money.

Don’t miss the savings built right into Google Cloud’s pricing models.

Take five minutes to review your billing dashboard; you may be leaving committed use or FlexRS discounts untouched on workloads that qualify.

The way you set up your job matters as much as how it's coded.

This is where Sedai really shines: it uses historical patterns, workload signatures, and real-time metrics to fine-tune configurations dynamically, so you’re not guessing your way to optimization.
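Much of that setup lives in pipeline options. In the Beam Python SDK, `--worker_machine_type`, `--max_num_workers`, `--autoscaling_algorithm`, and `--disk_size_gb` control how big each worker is, how far autoscaling can grow, and how much disk each worker gets. This sketch just assembles them as strings so it runs without Beam installed; the machine type and limits are hypothetical.

```python
def worker_config_args(machine_type: str, max_workers: int,
                       disk_size_gb: int) -> list[str]:
    """Beam Python pipeline options that bound a Dataflow job's worker footprint."""
    if max_workers < 1 or disk_size_gb < 1:
        raise ValueError("max_workers and disk_size_gb must be positive")
    return [
        f"--worker_machine_type={machine_type}",  # size each worker for the workload
        f"--max_num_workers={max_workers}",       # cap how far autoscaling can grow
        "--autoscaling_algorithm=THROUGHPUT_BASED",
        f"--disk_size_gb={disk_size_gb}",         # avoid paying for oversized disks
    ]


# Hypothetical values; derive real ones from your own job's metrics.
print(worker_config_args("n2-standard-4", max_workers=20, disk_size_gb=50))
```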

Smart optimization isn’t about tweaking settings once and hoping for the best. It’s about building pipelines that evolve with your workloads.

Also read: Top Cloud Cost Optimization Tools in 2025

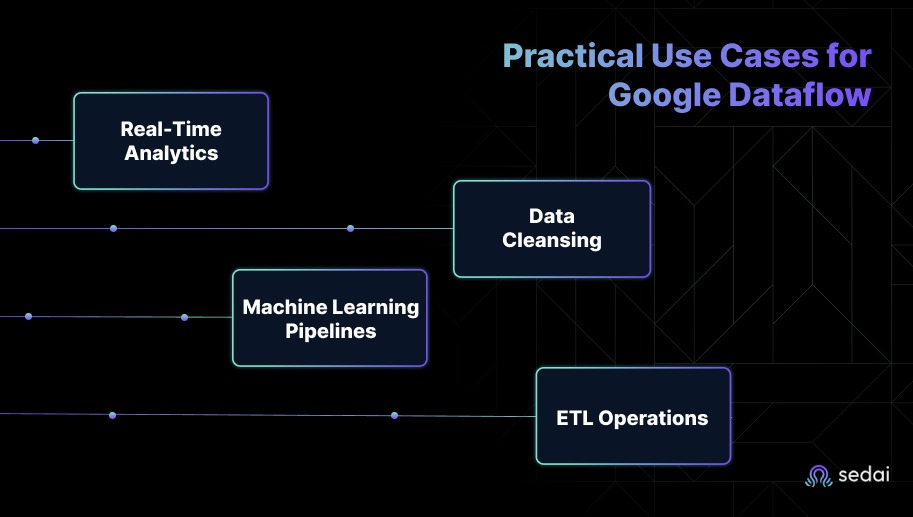

Google Dataflow isn’t just flexible; it’s built to handle real-world, high-impact scenarios at scale. Whether you’re cleaning messy datasets or running real-time streaming jobs, Dataflow gives you the tools to act fast, stay efficient, and stay in control of costs.

Real-Time Analytics and Streaming

When every second counts, batch processing won’t cut it.

Dataflow supports low-latency data streaming, processing events as they arrive instead of waiting for the next batch run.

If you’re building dashboards or triggering fraud alerts, Dataflow’s streaming mode helps you stay ahead of the curve.

Dirty data breaks systems. But cleaning it at scale is often slow and expensive.

With Dataflow, you can validate, deduplicate, and standardize data at scale inside the same pipeline that moves it.

It’s a smart way to improve data quality without writing and managing endless scripts.

You can’t build smart systems with dumb data pipelines.

Dataflow powers ML workflows by handling large-scale data preparation and feature engineering ahead of training and inference.

While Dataflow doesn’t train models directly, it handles the heavy lifting that makes model development faster and easier.

ETL jobs are the backbone of data infrastructure, but they’re rarely cost-efficient.

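Dataflow helps by letting you extract, transform, and load data in one managed pipeline, whether the source is a stream or a batch.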

You get continuous processing for streaming or scheduled batch runs, with autoscaling baked in.

Predicting and controlling Google Dataflow costs shouldn’t feel like chasing a moving target. But without visibility into usage, even small inefficiencies quickly scale into real expense.

This guide broke down how Dataflow pricing works, what drives your costs, and how to estimate smarter. From compute and shuffle to streaming and persistent disks, every piece matters when your bill arrives.

That’s where Sedai comes in.

We help you run Dataflow jobs efficiently, avoid overprovisioning, and automatically cut waste, without extra effort.

Ready to stop overspending on Dataflow?

Sedai gives you the automation and insights to stay optimized without slowing down.

Compute resources like vCPU and memory, along with shuffle operations and streaming data processing, are key cost drivers.

Use Google Cloud's pricing calculator, the cost tab in Cloud Console, or export billing data to BigQuery for detailed analysis.

Yes, Dataflow pricing can vary by region, especially for resources like persistent disk and streaming engine.

You can rightsize workers, minimize shuffle, use FlexRS or preemptible VMs, and Sedai can automate all of this safely.

Yes. Sedai applies real-time, performance-safe optimizations that reduce your Dataflow spend without any manual effort.
