November 19, 2025

November 20, 2025

ECS streamlines container operations with AWS-native automation, whereas EKS brings the full power and portability of upstream Kubernetes to modern cloud architectures. Fargate adds serverless scaling across both, ideal for unpredictable or short-lived workloads. Teams prioritizing simplicity lean toward ECS, while those embracing multi-cloud, GitOps, or advanced platform engineering often choose EKS. The right choice aligns directly with your organization’s maturity, performance needs, and long-term cloud roadmap.

For engineering leaders in 2025, choosing between Amazon ECS and Amazon EKS (AWS’s managed Kubernetes service) is a decision that defines how your teams operate, scale, and spend for years ahead.

Both services run containers on AWS, but their philosophies differ sharply: ECS favors simplicity and AWS-native control, while EKS delivers Kubernetes flexibility with added operational overhead.

That difference matters more than ever. According to the Cloud Native Computing Foundation’s 2024 Annual Survey, 93 percent of organizations are using, piloting, or evaluating Kubernetes, highlighting its dominance as the enterprise standard for container orchestration.

Yet, as McKinsey & Company (2024) reports, most organizations still have 10 to 20 percent of untapped cloud-cost savings that could be unlocked through smarter optimization and automation strategies, exactly the layer where container-platform choice, governance, and intelligent tooling intersect.

This guide explores how ECS and EKS differ in architecture, complexity, and cost behavior. Whether you’re modernizing legacy workloads, scaling microservices, or building a multi-cloud strategy, this analysis will help you make a confident, data-driven decision.

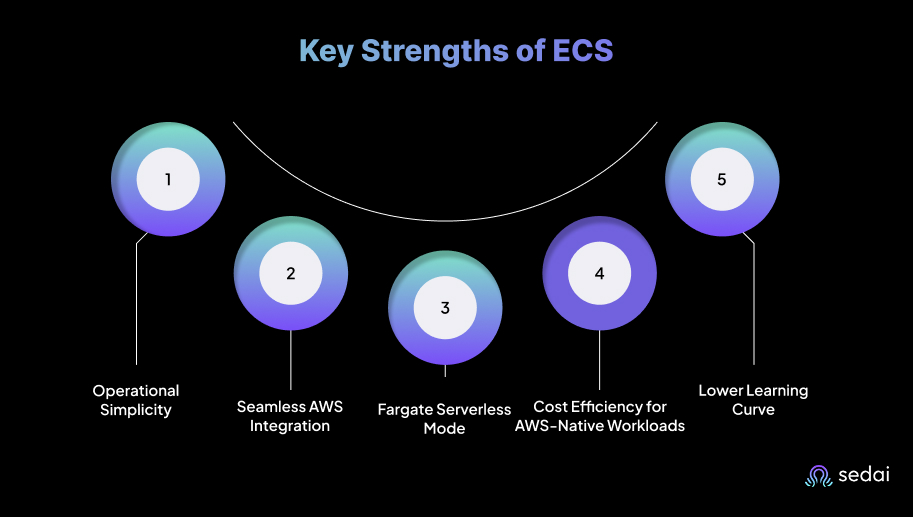

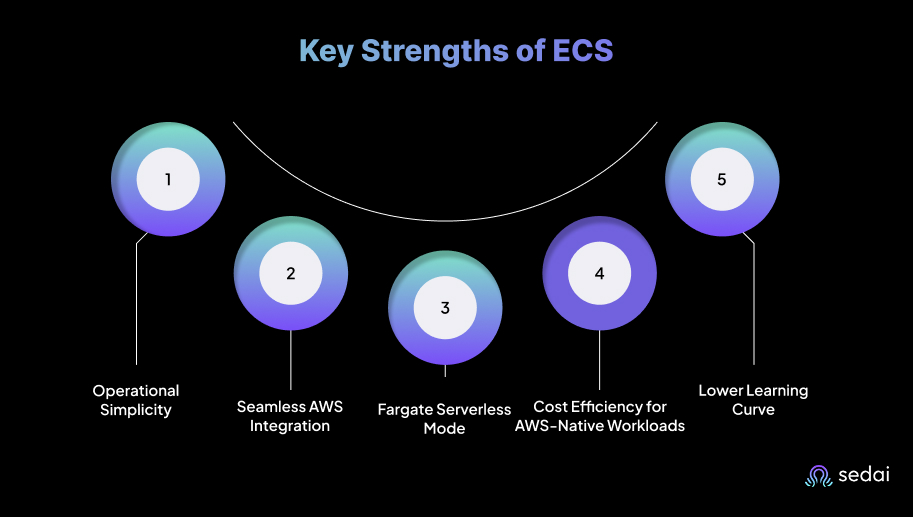

Amazon Elastic Container Service (ECS) is AWS’s fully managed container orchestration service designed for simplicity, speed, and tight AWS integration. It eliminates the operational overhead of managing Kubernetes clusters by abstracting scheduling, scaling, and networking into AWS-managed constructs.

ECS supports two main launch types: EC2 (containers run on EC2 instances that you provision and manage as cluster capacity) and Fargate (serverless containers with no infrastructure management). For most teams, ECS represents AWS’s opinionated path to container orchestration: tightly coupled, highly automated, and optimized for organizations that prioritize operational ease over ecosystem flexibility.

ECS abstracts away cluster management, version upgrades, and control plane tuning. Engineering teams can deploy containers directly using task definitions and services, allowing DevOps teams to focus on applications rather than cluster lifecycle operations.
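To make the task-definition idea concrete, here is a minimal sketch that builds one as a plain Python dictionary and prints it as JSON. The field names follow the ECS task definition format, but the helper `build_task_definition`, the family name `web-api`, the image URI, and the port are illustrative assumptions. A real definition would usually also set an execution role and logging.

```python
import json


def build_task_definition(family: str, image: str, cpu: int = 256, memory: int = 512) -> dict:
    """Return a minimal Fargate-compatible ECS task definition (hypothetical helper)."""
    return {
        "family": family,
        "networkMode": "awsvpc",                 # Fargate tasks use awsvpc networking
        "requiresCompatibilities": ["FARGATE"],
        "cpu": str(cpu),                         # task-level CPU units (1024 = 1 vCPU)
        "memory": str(memory),                   # task-level memory in MiB
        "containerDefinitions": [
            {
                "name": family,
                "image": image,                  # placeholder image URI
                "essential": True,
                "portMappings": [{"containerPort": 8080, "protocol": "tcp"}],
            }
        ],
    }


task_def = build_task_definition("web-api", "example.com/web-api:1.0")
print(json.dumps(task_def, indent=2))
```

The resulting JSON is the kind of document you would register with ECS and then reference from a service. Everything cluster-related stays out of it, which is the point the paragraph above makes.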

Every aspect of ECS, from IAM roles and VPC networking to CloudWatch metrics and Load Balancers, fits natively into AWS’s ecosystem. That means less time wiring infrastructure and fewer custom scripts.

With ECS on Fargate, you run containers without managing EC2 instances. This is ideal for sporadic, short-lived workloads where compute demand fluctuates. Billing is purely per vCPU and memory per second: predictable and maintenance-free.

ECS’s pricing model is simpler than that of EKS, with no control plane fee and no external dependency management. For teams running steady workloads entirely inside AWS, ECS usually delivers a lower total cost of ownership (TCO).

ECS’s declarative configuration is far simpler than Kubernetes manifests. Teams without deep K8s expertise can deploy production workloads faster, often within days.

ECS is AWS-proprietary. Workloads can’t natively migrate to another cloud or an on-prem Kubernetes cluster without refactoring.

Unlike Kubernetes, ECS lacks a broad community ecosystem of open-source tools, operators, and controllers.

Advanced workloads requiring custom schedulers, service meshes, or multi-cluster orchestration often outgrow ECS’s simplicity.

While ECS is AWS’s opinionated choice for container management, EKS represents the other end of the spectrum: Kubernetes-native orchestration with full control and ecosystem extensibility.

Amazon Elastic Kubernetes Service (EKS) is AWS’s managed Kubernetes platform, a service that gives you the flexibility and power of upstream Kubernetes while offloading the burden of running the control plane.

Where ECS offers simplicity, EKS offers standardization. It’s the Kubernetes “flavor” for AWS teams who want to run cloud-native workloads with the same tooling, APIs, and manifests used across the open-source Kubernetes ecosystem while retaining AWS’s operational reliability and security.

EKS is ideal for organizations that value portability, multi-cloud optionality, or hybrid deployments that span the AWS cloud and on-prem data centers via EKS Anywhere.

EKS runs upstream Kubernetes without modifications. This ensures full compatibility with kubectl, Helm, operators, and the CNCF ecosystem, allowing teams to migrate workloads or extend their clusters with minimal friction.

AWS operates and scales the Kubernetes control plane for you, including the API server, etcd, and key management components, ensuring resilience across multiple Availability Zones. You pay a fixed control plane fee ($0.10/hour per cluster), decoupled from compute costs.

EKS integrates with tools like Argo CD, Prometheus, Istio, and Karpenter, while still using AWS’s managed services (IAM, CloudWatch, ECR, and the AWS Load Balancer Controller, formerly the ALB Ingress Controller). This combination of open-source flexibility and AWS integration is a major draw for platform engineering teams.

With EKS Anywhere, organizations can run the same Kubernetes distribution that powers EKS on their own on-premises infrastructure, maintaining consistent cluster operations and governance. It’s a strong option for regulated or hybrid environments.

EKS exposes the full Kubernetes API surface, letting teams fine-tune networking (CNI), autoscaling (HPA/VPA), scheduling, and policy enforcement, capabilities that ECS intentionally hides for simplicity.

Running EKS means managing node groups, networking plugins, IAM roles for service accounts (IRSA), and cluster lifecycle updates. It demands Kubernetes fluency from your team or strong automation to bridge the skills gap.

In addition to the control plane fee, EKS introduces costs for worker nodes (EC2 or Fargate), storage, and network egress. Without tagging discipline or cost governance, total cost can drift fast across namespaces and clusters.

Platform engineering teams often underestimate the day-2 operational load: cluster upgrades, add-on compatibility, and observability setup can consume significant engineering bandwidth.

AWS Fargate is a serverless compute engine that runs containers without provisioning or managing servers. Instead of managing EC2 instances, teams define CPU, memory, and network requirements per task or pod, and Fargate handles the rest: provisioning, scaling, patching, and isolation. This makes it an ideal foundation for teams seeking agility without infrastructure overhead.

Fargate pricing is based on the vCPU and memory each task or pod requests, billed per second for as long as it runs.

This pay-per-use model makes short-lived, bursty, or unpredictable workloads ideal candidates.
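The pay-per-use arithmetic can be sketched in a few lines. The function name `fargate_task_cost` and both hourly rates below are placeholders chosen for illustration, not actual AWS prices, which vary by region and architecture. The one-minute minimum charge is also passed in as a parameter so it can be adjusted.

```python
def fargate_task_cost(
    vcpu: float,
    memory_gb: float,
    seconds: int,
    vcpu_rate_per_hour: float,
    gb_rate_per_hour: float,
    min_seconds: int = 60,
) -> float:
    """Estimate the cost of one task run from its requested vCPU and memory.

    Rates are caller-supplied placeholders; look up current regional pricing
    before relying on any result.
    """
    billed_seconds = max(seconds, min_seconds)   # apply the minimum billing duration
    hours = billed_seconds / 3600
    return hours * (vcpu * vcpu_rate_per_hour + memory_gb * gb_rate_per_hour)


# A 15-minute batch task with 0.5 vCPU and 1 GB of memory, at made-up rates.
cost = fargate_task_cost(0.5, 1.0, 900, vcpu_rate_per_hour=0.04, gb_rate_per_hour=0.004)
print(f"{cost:.4f}")
```

Because the bill scales with run time, a task that runs for fifteen minutes a few times a day costs a small fraction of what an always-on instance would.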

Fargate simplifies orchestration by removing the infrastructure layer, but it’s not a one-size-fits-all solution. Engineering leaders often blend EC2 + Fargate within ECS or EKS to strike the right balance between cost control, elasticity, and performance consistency.

When AWS first launched ECS and later EKS, the goal wasn’t to replace one with the other. It was to offer two orchestration models for different operating philosophies. For engineering leaders, the key isn’t “which is better,” but which aligns with your organization’s skill set, governance maturity, and workload patterns.

At first glance, ECS looks cheaper and often is, but that’s not the whole picture. The difference lies in what you’re paying for.

ECS minimizes operational overhead, which makes it ideal for small DevOps teams or fast-moving startups. EKS rewards experienced platform engineering teams capable of automating cluster management and observability through IaC.

Implication:

Both services integrate with AWS Fargate, allowing serverless scaling. However, EKS + Fargate offers flexibility (per-pod scheduling), while ECS + Fargate offers simplicity (per-task scheduling).

If your FinOps and governance maturity is limited and you want simpler tagging, easier cost control, and less operational overhead, ECS is the safer bet.

With serverless compute available on either platform, the difference lies in control versus convenience. ECS wins for operational simplicity and predictable costs. EKS wins for Kubernetes ecosystem leverage and future-proof scalability.

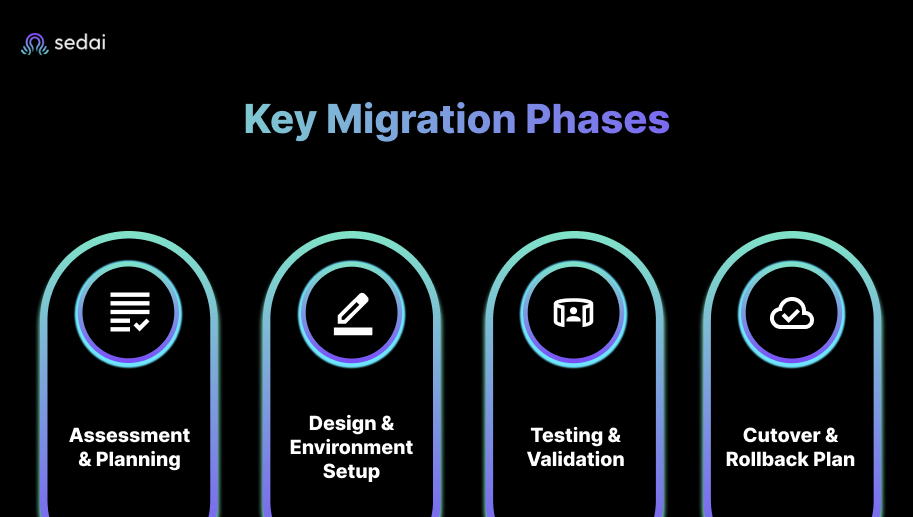

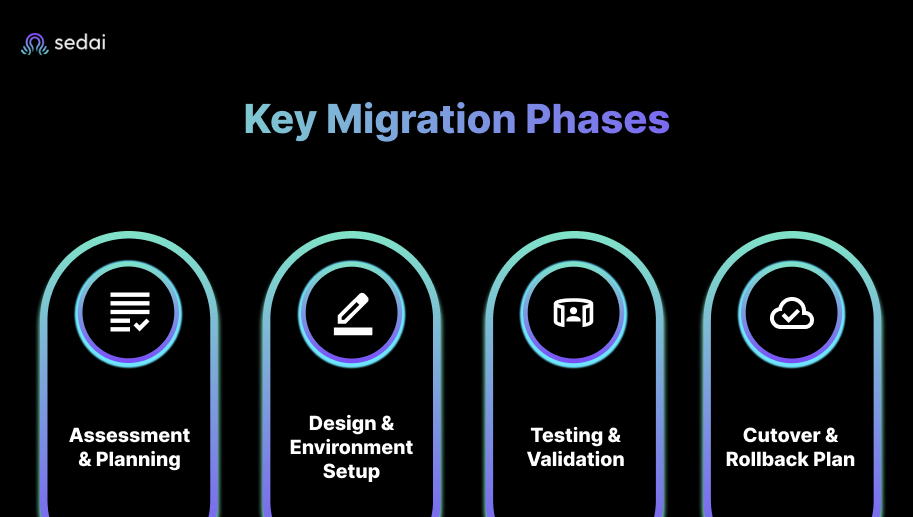

Migrating between ECS and EKS is an architectural realignment. Teams usually make this move when their workloads or organizational maturity evolve: toward ECS to simplify, or toward EKS to scale and modernize. A successful migration balances performance, reliability, and cost while minimizing disruption to production environments.

Inventory workloads, dependencies, and integration points (databases, VPCs, IAM). Identify container image compatibility (Docker vs OCI) and network configuration gaps. Define SLAs and acceptable downtime for migration windows.

For EKS migration, create new clusters using eksctl or Terraform, configure networking (VPC, subnets, security groups), and integrate IAM roles. For ECS migration, define task definitions, target groups, and scaling policies. Standardize CI/CD pipelines to support both environments temporarily.

Run staging workloads in the new platform and validate service discovery, scaling, and monitoring configurations. Conduct load and chaos testing to verify resilience under real-world conditions. Test autoscaling, network policies, and observability stack integration.

Execute migration incrementally, workload by workload. Maintain parallel traffic routing using ALB or Route 53 weighted routing until confidence builds. Always maintain a rollback pipeline to the original cluster or service.
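The incremental cutover can be planned as a simple schedule of traffic weights. The sketch below only computes the weight pairs; applying them to Route 53 weighted records or ALB target groups is left out, and the function name `weight_schedule` and the 0 to 100 scale are assumptions for illustration.

```python
def weight_schedule(steps: int, total: int = 100) -> list[tuple[int, int]]:
    """Return (old_platform_weight, new_platform_weight) pairs for a gradual shift."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    schedule = []
    for i in range(1, steps + 1):
        new_weight = round(total * i / steps)    # share of traffic sent to the new platform
        schedule.append((total - new_weight, new_weight))
    return schedule


plan = weight_schedule(4)
print(plan)  # [(75, 25), (50, 50), (25, 75), (0, 100)]

# Rolling back means re-applying an earlier pair, for example plan[0].
```

Keeping the schedule as data makes rollback trivial: if error rates rise at one step, the pipeline re-applies the previous pair rather than improvising.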

Even the best-designed container architectures can underperform if execution and governance aren’t aligned. Engineering leaders often discover that inefficiencies, not infrastructure limits, are what inflate costs or degrade reliability. Understanding these pitfalls early helps teams avoid months of reactive fixes.

Many teams evaluate ECS and EKS only by compute costs, overlooking EKS’s control-plane fee and operational overhead. The real cost difference often emerges from underutilized resources or inefficient autoscaling configurations. Regular cost audits, right-sizing, and workload-level tagging prevent unnoticed budget creep.

Autoscaling is a top source of instability. On ECS, scaling policies based solely on CPU can miss memory or I/O spikes. On EKS, poorly tuned HPA or Cluster Autoscaler settings can cause oscillations, leading to frequent restarts or resource starvation. Always tune thresholds using historical metrics and set conservative cooldown periods to stabilize scaling behavior.
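To see why thresholds matter, it helps to look at the scaling rule the Kubernetes Horizontal Pod Autoscaler documents: desired replicas equal the current replicas multiplied by the ratio of the observed metric to the target, rounded up, with no change when the ratio is within a tolerance (0.1 by default). The sketch below reimplements that rule in simplified form. The function name and the replica bounds are illustrative, and the real controller also handles missing metrics, pod readiness, and stabilization windows.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    tolerance: float = 0.1,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Simplified version of the documented HPA replica calculation."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas                  # close enough to target: do nothing
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, 90, 60))   # 6: CPU at 90% against a 60% target scales out
print(desired_replicas(4, 63, 60))   # 4: within tolerance, no change
print(desired_replicas(4, 30, 60))   # 2: well under target scales in
```

A target set too close to normal load keeps the ratio hovering around the tolerance band, which is one way the oscillations described above arise.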

Fargate simplifies operations but can inflate long-term costs for 24/7 workloads. Teams often deploy everything to Fargate for convenience, only to discover it’s more expensive than EC2-based clusters. The right balance: use Fargate for unpredictable or short-lived tasks, and EC2 for stable, consistent workloads.
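A rough break-even calculation shows where that balance tips. The sketch compares a fixed monthly cost for EC2 capacity against an hourly Fargate cost for an equivalent task. Both figures are made-up placeholders, and the helper names are hypothetical. Real comparisons should also account for bin-packing efficiency, Savings Plans, and the operational effort of managing instances.

```python
def breakeven_hours(ec2_monthly_cost: float, fargate_hourly_cost: float) -> float:
    """Hours of runtime per month above which the fixed EC2 cost is cheaper."""
    if fargate_hourly_cost <= 0:
        raise ValueError("fargate_hourly_cost must be positive")
    return ec2_monthly_cost / fargate_hourly_cost


def cheaper_option(hours_per_month: float, ec2_monthly_cost: float, fargate_hourly_cost: float) -> str:
    """Return 'fargate' or 'ec2' for a workload running the given hours per month."""
    fargate_cost = hours_per_month * fargate_hourly_cost
    return "fargate" if fargate_cost < ec2_monthly_cost else "ec2"


# Placeholder figures: 30.00 per month for EC2 capacity, 0.06 per hour on Fargate.
print(round(breakeven_hours(30.0, 0.06)))     # 500
print(cheaper_option(100, 30.0, 0.06))        # fargate: a job running ~3 hours a day
print(cheaper_option(730, 30.0, 0.06))        # ec2: an always-on service
```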

EKS introduces Kubernetes RBAC, Pod Security Standards (enforced through Pod Security Admission, which replaced the PodSecurityPolicy API removed in Kubernetes 1.25), and network policies, which are powerful but easy to misconfigure. Missing RBAC roles or conflicting namespace policies can break deployments or open vulnerabilities. Use least-privilege access, centralize IAM-to-RBAC mapping, and enforce policies via Infrastructure as Code.
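One lightweight way to enforce least privilege in an IaC pipeline is to lint Role manifests before they are applied. The sketch below flags rules that grant wildcard verbs or resources. The manifest structure follows the Kubernetes `rbac.authorization.k8s.io/v1` Role format, while the helper `overly_broad_rules` and the example role are hypothetical.

```python
def overly_broad_rules(role: dict) -> list[dict]:
    """Return the rules in a Role manifest that use '*' for verbs or resources."""
    broad = []
    for rule in role.get("rules", []):
        if "*" in rule.get("verbs", []) or "*" in rule.get("resources", []):
            broad.append(rule)
    return broad


# Example manifest, as it might look after loading YAML into a dict.
role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "Role",
    "metadata": {"name": "deployer", "namespace": "payments"},
    "rules": [
        {"apiGroups": ["apps"], "resources": ["deployments"], "verbs": ["get", "list", "patch"]},
        {"apiGroups": [""], "resources": ["*"], "verbs": ["*"]},   # too permissive
    ],
}

flagged = overly_broad_rules(role)
print(len(flagged))  # 1
```

A check like this can run in CI so that a wildcard grant fails the build instead of reaching the cluster.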

Without unified observability, teams react to incidents instead of preventing them. ECS provides native CloudWatch integration, while EKS usually needs extra setup for full-stack visibility, such as CloudWatch Container Insights or a Prometheus and Grafana stack (self-hosted, or via Amazon Managed Service for Prometheus and Amazon Managed Grafana). Establish baselines for latency, error rates, and resource utilization and link them directly to cost dashboards for full accountability.

Cloud cost optimization isn’t just a technical problem. It’s a process problem. Without ownership, idle resources and overprovisioned clusters multiply. Integrate FinOps principles early: enforce tagging standards, assign budgets per team or namespace, and use anomaly detection to flag deviations automatically.
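As an illustration of automated deviation flagging, the sketch below marks days whose cost sits far above the trailing week’s average. It is a deliberately simple z-score check, not how any particular AWS or vendor anomaly detector works, and the function name, window, and threshold are assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(daily_costs: list[float], window: int = 7, threshold: float = 3.0) -> list[int]:
    """Return indexes of days whose cost exceeds the trailing mean by `threshold` standard deviations."""
    anomalies = []
    for i in range(window, len(daily_costs)):
        history = daily_costs[i - window:i]      # the preceding `window` days
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and (daily_costs[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Daily spend for one team or namespace; day 7 contains a spike.
costs = [100, 102, 98, 101, 99, 103, 100, 180, 101]
print(flag_anomalies(costs))  # [7]
```

Running a check like this per team or namespace only works if tagging is consistent, which is why the tagging standard comes first.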

The best-run organizations combine robust automation, proactive monitoring, and autonomous optimization tools to eliminate these pitfalls before they impact performance or cost.

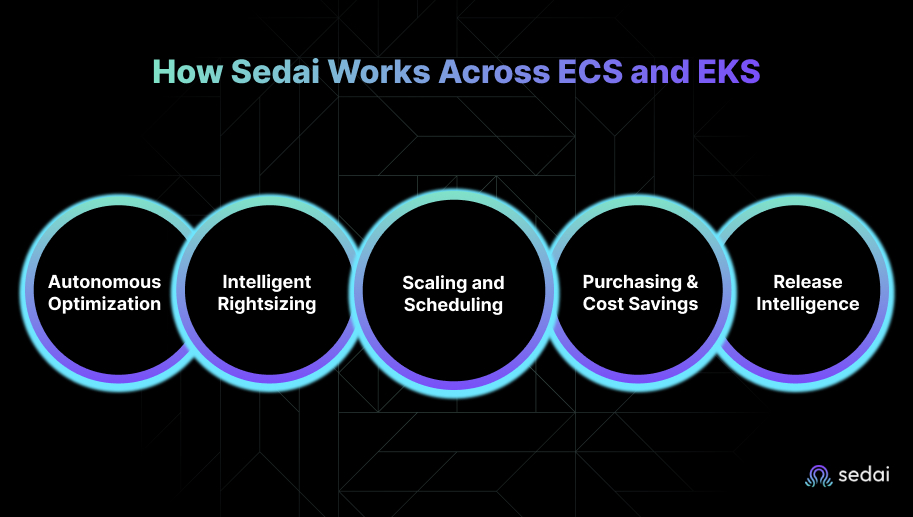

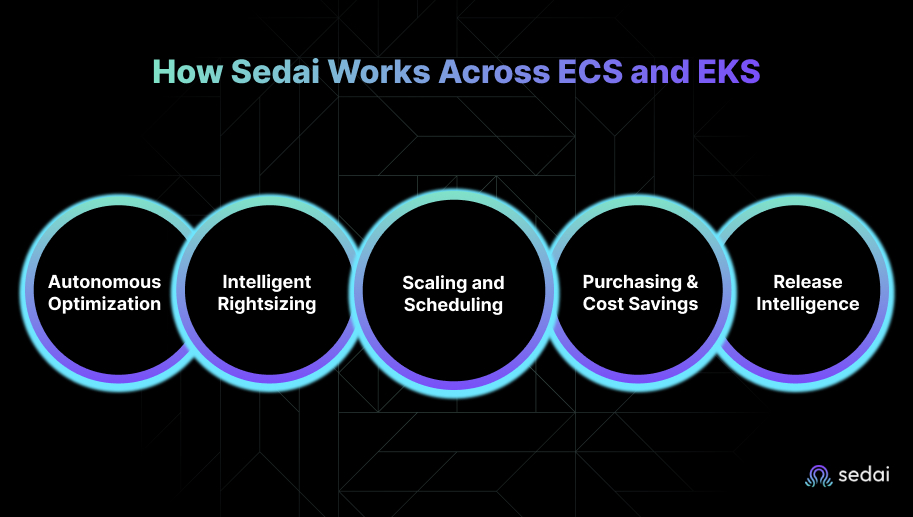

Modern container orchestration platforms like Amazon EKS and Amazon ECS provide immense capability, but maintaining cost efficiency, performance, and reliability at scale requires a new class of tooling. That’s where Sedai comes in: an autonomous cloud optimization platform designed to integrate directly into your container strategy.

Mode settings (Datapilot, Copilot, Autopilot): Teams can choose their comfort level. Start with monitoring-only (Datapilot), then review recommendations (Copilot), and finally allow full autonomous execution (Autopilot).

Sedai is the only safe way to reduce cloud costs at enterprise scale. Across our fully deployed customers, we consistently achieve:

With Sedai in place, the choice of ECS vs EKS becomes less about “Which will we manage better?” and more about “Which do we need for functionality?” because Sedai will help manage the efficiency of either. Sedai turns your container platform into a self-optimizing system that continuously tunes itself for cost and performance, without human toil.

See how engineering teams measure tangible cost and performance gains with Sedai’s autonomous optimization platform: Calculate Your ROI.

For most engineering leaders, the real question isn’t “ECS or EKS?” It’s how to run containers efficiently, safely, and predictably over time. ECS offers simplicity and seamless AWS integration, while EKS delivers the flexibility and standardization of Kubernetes. But both face the same reality: cloud environments evolve faster than manual governance can keep up.

That’s why the smartest teams are shifting from reactive monitoring to autonomous optimization. Platforms like Sedai close the loop, continuously analyzing workload behavior, tuning compute and scaling decisions, and enforcing Smart SLOs to maintain performance without overspend.

EKS generally costs more than ECS due to the managed control-plane fee of $0.10/hour (about $73/month per cluster at 730 hours) and the additional operational overhead of managing Kubernetes components. ECS, being AWS-native, doesn’t charge a cluster fee; you only pay for the compute, storage, and networking used.
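The control-plane arithmetic is easy to check. The sketch below multiplies the $0.10 hourly fee quoted above by an assumed 730 hours per month and by the number of clusters. The function name and the three-cluster example are illustrative.

```python
HOURS_PER_MONTH = 730  # assumed average month length in hours


def control_plane_monthly_cost(clusters: int, hourly_fee: float = 0.10) -> float:
    """Monthly EKS control-plane cost for a number of clusters, excluding compute."""
    return round(clusters * hourly_fee * HOURS_PER_MONTH, 2)


print(control_plane_monthly_cost(1))  # 73.0
print(control_plane_monthly_cost(3))  # 219.0, e.g. separate dev, staging, and prod clusters
```

The fee is per cluster, so the number of clusters an organization runs matters more than the size of any one of them.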

AWS Fargate is a serverless compute engine compatible with both ECS and EKS. It removes the need to manage EC2 instances and automatically scales container workloads. In ECS, Fargate provides the simplest, AWS-native experience; in EKS, it enables Kubernetes workloads to run serverlessly. Sedai further enhances both by dynamically tuning Fargate resource allocations and scaling behaviors for optimal performance and cost.

ECS is purpose-built for AWS and excels in simplicity and integration with AWS services. AKS (Azure Kubernetes Service), on the other hand, is Kubernetes-native and suited for hybrid or Azure-first environments. Organizations adopting multi-cloud typically standardize on Kubernetes-based platforms (EKS and AKS) for portability, while using Sedai to maintain a unified optimization layer across both clouds.

The safest migration path is incremental and reversible. Start with an assessment of workloads, dependencies, and SLA requirements, then migrate one service at a time using Infrastructure-as-Code tools. Validate in staging, monitor autoscaling, and maintain rollback routes using Route 53 weighted traffic. For ECS to EKS, prepare for Kubernetes RBAC and networking differences; for EKS to ECS, simplify CI/CD and scaling configurations.
