December 4, 2024

March 12, 2022

Competing in today’s global market requires the agility to deliver value fast. It also means maintaining the highest levels of reliability, availability, and security across your entire cloud. For many teams, juggling innovation alongside operations has limited their ability to scale.

That’s where AWS Lambda can help. With a serverless-first strategy, your team can focus on customer needs instead of the infrastructure your applications run on. This lets your team be more agile without sacrificing performance or security, while also lowering your cloud costs.

Serverless functions run on demand, starting when needed and stopping when the work is done. Events you configure (such as a file upload or an API request) trigger the function to start. When the invocation finishes, the function sits idle until another trigger event occurs.
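To make the trigger model concrete, here is a minimal sketch of a Python Lambda handler invoked by an S3 file upload. The `Records[].s3.bucket.name` and `Records[].s3.object.key` fields follow the S3 event notification format; the handler name `handler` and what it does with each object are illustrative assumptions.

```python
from urllib.parse import unquote_plus


def handler(event, context):
    """Entry point Lambda calls once per trigger event (here, an S3 upload)."""
    uploaded = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # S3 URL-encodes object keys in event notifications (spaces arrive as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        uploaded.append(f"s3://{bucket}/{key}")
    # All work happens inside this call; once it returns, the function sits idle
    # until the next event arrives.
    return {"processed": uploaded}
```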

Because you pay only for the time the cloud provider spends executing your function, you can save money: unless you use provisioned concurrency (which I’ll cover shortly), an idle function incurs no charges. The same on-demand model also makes it easier to scale your application to absorb traffic spikes and more concurrent users without hurting performance.
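The pay-per-use model boils down to a simple formula: a per-request charge plus a compute charge measured in GB-seconds (allocated memory × execution time). The Python sketch below uses illustrative rates that are assumptions, not quoted prices, and ignores the free tier; treat the numbers as placeholders.

```python
# Illustrative on-demand rates (assumed: x86, us-east-1, first pricing tier, at the
# time of writing). Check the current AWS Lambda pricing page before relying on them.
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 0.0000166667


def on_demand_cost(requests: int, avg_duration_ms: float, memory_mb: int,
                   price_per_million_requests: float = PRICE_PER_MILLION_REQUESTS,
                   price_per_gb_second: float = PRICE_PER_GB_SECOND) -> float:
    """Estimate on-demand Lambda cost: a request charge plus compute in GB-seconds.

    Ignores the free tier. With zero requests both terms are zero, so idle time is free.
    """
    gb_seconds = requests * (avg_duration_ms / 1000.0) * (memory_mb / 1024.0)
    request_charge = requests / 1_000_000 * price_per_million_requests
    return request_charge + gb_seconds * price_per_gb_second
```

For example, one million 100 ms invocations at 512 MB come to about $1.03 at these assumed rates, and a period with no invocations costs nothing.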

Serverless development shifts the burden of management and provisioning onto the cloud provider, freeing your team to focus on development and on improving speed and quality. That’s especially important for lean development teams.

While the cost savings, time savings, and scalability of serverless are attractive, serverless environments come with their own set of challenges, such as cold starts and distributed tracing.

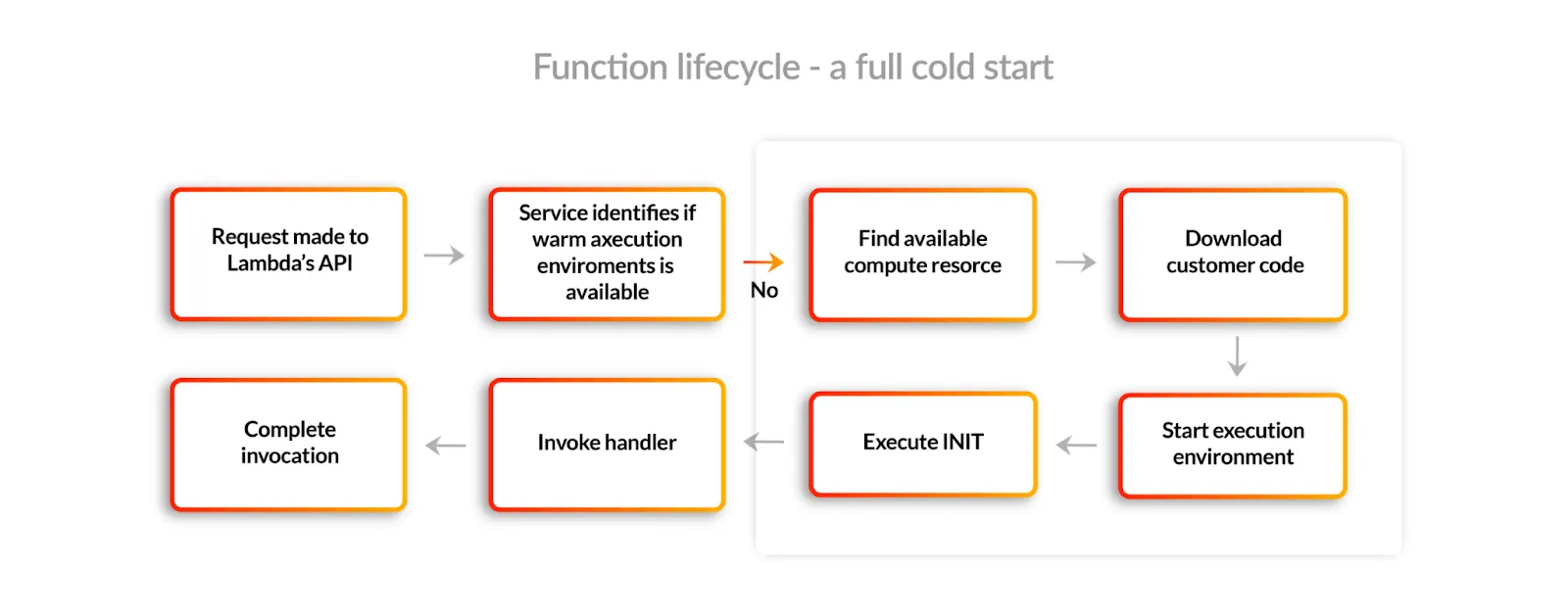

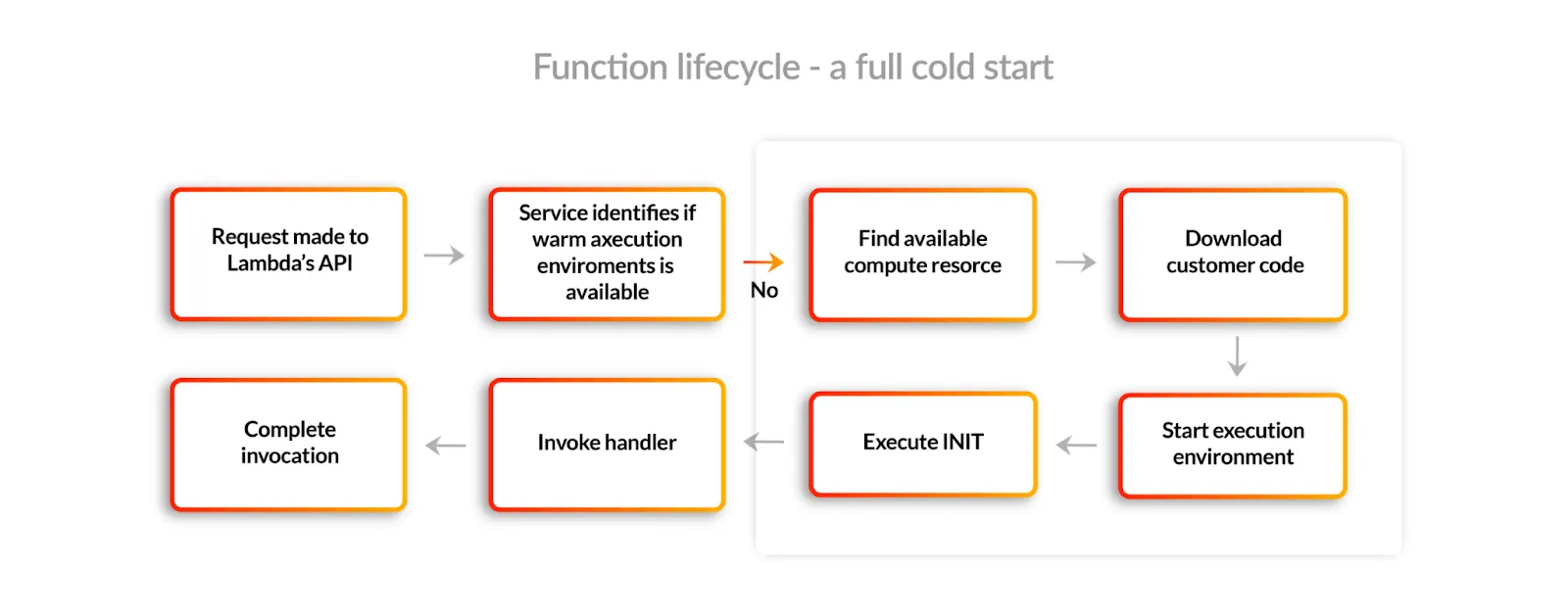

Cold starts are a common concern in serverless development. Because a function is idle until a request arrives or it is invoked, Lambda sometimes has no warm execution environment available and must initialize a new one from scratch (loading your code and running its initialization) before it can handle the request. That adds delay, and those extra milliseconds can add up and ultimately hurt your application’s performance and user experience.
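One way to see cold starts in your own functions is to record whether an invocation ran in a freshly initialized environment. In Lambda, module-level code runs during initialization, so a module-level flag is true only for the first invocation in each environment. This minimal Python sketch assumes a handler named `handler`; the returned field names are illustrative.

```python
import time

# Module-level code runs once, when Lambda initializes a new execution environment
# (the cold start). Later invocations that reuse the environment skip it.
_INIT_STARTED = time.monotonic()
_is_cold = True


def handler(event, context):
    global _is_cold
    cold_start = _is_cold
    _is_cold = False  # every later call in this environment is a warm start
    return {
        "cold_start": cold_start,
        "seconds_since_init": round(time.monotonic() - _INIT_STARTED, 3),
    }
```

Logging `cold_start` alongside request latency makes it easy to see how much of your tail latency comes from initialization.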

Distributed tracing is another challenge that serverless makes harder. Pinpointing the source of an error, understanding how data flows through the application, and finding ways to improve performance all depend on it. With so many small, standalone functions, it’s nearly impossible to trace a request end to end and identify where an issue began. And when you’re working with disparate cloud services and hundreds of Lambda functions, you’ll need to invest in specialized tools to have any chance of tracing effectively.
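The core idea behind most tracing tools is context propagation: every function passes along an identifier for the request it is serving, so logs from different functions can be stitched back together. The minimal Python sketch below shows that idea by hand; the `correlation_id` field and the two handler names are hypothetical, and in practice a dedicated tool such as AWS X-Ray or OpenTelemetry would manage the IDs for you.

```python
import json
import uuid

CORRELATION_KEY = "correlation_id"  # hypothetical field carried in every event payload


def get_correlation_id(event: dict) -> str:
    """Reuse the caller's ID, or start a new trace if this is the entry point."""
    return event.get(CORRELATION_KEY) or str(uuid.uuid4())


def log(correlation_id: str, function: str, message: str) -> None:
    # One structured line per step; filtering logs by correlation_id rebuilds the path.
    print(json.dumps({CORRELATION_KEY: correlation_id, "function": function, "message": message}))


def order_handler(event, context=None):
    cid = get_correlation_id(event)
    log(cid, "order_handler", "order received")
    # Forward the same ID in the payload handed to the next function.
    return {CORRELATION_KEY: cid, "order_id": event.get("order_id")}


def payment_handler(event, context=None):
    cid = get_correlation_id(event)
    log(cid, "payment_handler", f"charging order {event.get('order_id')}")
    return {CORRELATION_KEY: cid, "status": "charged"}
```

Calling `payment_handler(order_handler({"order_id": 42}))` prints two log lines that share one `correlation_id`.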

There are a few ways to get around cold starts.

The first option, which I don’t recommend, is to keep functions “warm” so they are ready for immediate use when called. Warm-ups rely on custom code or a third-party solution that periodically sends dummy invocations. While this may speed up your application, it can be tricky to implement and control. You have to add extra code to each function, and the extra calls make logging unnecessarily cumbersome.
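To illustrate why warm-ups add per-function code, here is a minimal Python sketch of the branch each warmed function typically needs. It assumes the scheduler sends an event like `{"warmup": true}`; that marker and `do_real_work` are hypothetical. Note that one sequential ping keeps only one execution environment warm, not several concurrent ones.

```python
WARMUP_KEY = "warmup"  # hypothetical marker set by the scheduled dummy call


def do_real_work(event):
    # Placeholder for the function's actual business logic.
    return {"status": "ok", "echo": event.get("payload")}


def handler(event, context=None):
    # Every function kept warm needs a branch like this so dummy calls return
    # immediately instead of running business logic or writing noisy logs.
    if isinstance(event, dict) and event.get(WARMUP_KEY):
        return {"warmed": True}
    return do_real_work(event)
```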

AWS launched provisioned concurrency in response to the limitations of warm-ups. Instead of relying on custom code or a third-party solution, you configure warm instances without changing your code. You specify how many provisioned instances a particular function should have, and AWS keeps that many instances initialized and ready for action.
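Choosing that number is the hard part. AWS’s scaling documentation estimates concurrency as average requests per second multiplied by average request duration in seconds. The sketch below applies that estimate with an optional headroom factor (an assumption of this example) and then sets the value with boto3’s `put_provisioned_concurrency_config`, which needs a published version or alias as the `Qualifier`; the function and alias names you pass are placeholders.

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_ms: float,
                         headroom: float = 0.0) -> int:
    """Estimate concurrent executions: requests/s x average duration (s), plus headroom."""
    estimate = requests_per_second * avg_duration_ms / 1000.0 * (1.0 + headroom)
    # Round off floating-point noise, then round up to whole execution environments.
    return math.ceil(round(estimate, 6))


def apply_provisioned_concurrency(function_name: str, qualifier: str, executions: int) -> dict:
    """Configure provisioned concurrency on a published version or alias."""
    import boto3  # imported here so the sizing helper runs without the AWS SDK installed

    client = boto3.client("lambda")
    return client.put_provisioned_concurrency_config(
        FunctionName=function_name,
        Qualifier=qualifier,  # a version number or alias name; $LATEST is not allowed
        ProvisionedConcurrentExecutions=executions,
    )
```

For example, `required_concurrency(50, 150)` estimates 7.5 concurrent executions and rounds up to 8. Keeping the boto3 import inside `apply_provisioned_concurrency` lets you test the sizing logic without AWS credentials.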

Seems simple enough, right? Spoiler alert: provisioned concurrency also has downsides.

Because provisioned concurrency is no longer purely usage-based (you pay for the configured instances whether or not they serve requests), you can expect costs to rise significantly. Provisioned concurrency charges can cancel out much of the cost-effectiveness you gained by going serverless. Provisioned concurrency is AWS’s answer to cold starts, but on its own it still falls short of unlocking the full benefits of serverless.
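To see why, look at how the standing charge accrues: provisioned concurrency is billed for the concurrency you configure, for as long as it is enabled, even if no request ever arrives. This Python sketch computes only that standing charge; the rate is an illustrative assumption, not a quoted price, and invocation charges come on top.

```python
# Assumed illustrative rate for provisioned concurrency (x86, us-east-1, at the time
# of writing). Check current AWS Lambda pricing before using it.
PC_PRICE_PER_GB_SECOND = 0.0000041667


def provisioned_standing_cost(concurrency: int, memory_mb: int, hours_enabled: float,
                              price_per_gb_second: float = PC_PRICE_PER_GB_SECOND) -> float:
    """Charge for keeping environments initialized, owed even with zero invocations.

    Request and duration charges for actual invocations are billed on top of this.
    """
    gb_seconds = concurrency * (memory_mb / 1024.0) * hours_enabled * 3600
    return gb_seconds * price_per_gb_second
```

For example, 10 environments at 1,024 MB kept on for a 30-day month (720 hours) cost about $108 at the assumed rate before a single request is served.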

With Sedai, you can keep the benefits of serverless while using provisioned concurrency, because Sedai manages it intelligently for you. Sedai adjusts based on your seasonality, dependencies, traffic, and anything else it observes in the platform. Before taking an action, Sedai makes sure the rest of your dependencies won’t be negatively impacted.
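As a much simpler, generic illustration of sizing to seasonality (not Sedai’s algorithm, which isn’t described here), the sketch below derives an hour-of-day provisioned-concurrency target from past peak concurrency. The input format, the max-plus-headroom rule, and the 20% default headroom are all assumptions for this example; it ignores dependencies and longer-term trends.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def hourly_targets(history: Iterable[Tuple[int, float]], headroom: float = 0.2) -> Dict[int, int]:
    """Map each hour of day (0-23) to a provisioned-concurrency target.

    `history` holds (hour_of_day, observed_peak_concurrency) samples from past days.
    Each hour's target is its highest observed peak plus headroom, rounded up.
    """
    peaks: Dict[int, float] = defaultdict(float)
    for hour, concurrency in history:
        peaks[hour] = max(peaks[hour], concurrency)
    # round() strips floating-point noise before rounding up to whole environments.
    return {hour: math.ceil(round(peak * (1.0 + headroom), 6)) for hour, peak in sorted(peaks.items())}
```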

The platform independently acts on your behalf to:

Serverless infrastructure management comes with challenges, but Sedai can help you overcome them. A serverless-first strategy can substantially increase team agility, and with Sedai your team can confidently take full advantage of serverless benefits.

Choosing serverless means choosing innovation, and in today’s fast-paced industry, that’s the only way to get ahead. Leave the infrastructure management to your cloud provider and free up your team’s time to focus on the application and deliver value.

Join our Slack community to learn more and start using Sedai for free today.
