November 6, 2025

In the past two weeks, the world was hit by two massive cloud outages: one at AWS and, a week later, one at Azure.

The AWS outage alone resulted in about half a billion dollars in potential damages, leaving engineering leaders with tough questions about how to prepare for the next big one.

Sedai had a front-row seat to both outages, since our platform manages and optimizes the cloud at many large enterprises. In fact, our technology detected the early signs in our customers' environments before the world learned about these events.

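In this blog, we'll quickly cover what caused each outage, how Sedai detected the early signs, and three strategies to limit the blast radius of the next one.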

As the cloud becomes more and more complex, experts who weighed in on the outages all agreed on one thing: There are more outages coming.

For AWS, the primary culprit was a DNS issue. After increased error rates and latencies were reported for AWS services in the US-EAST-1 Region, AWS’s incident summary confirmed the massive outage was “triggered by a latent defect within the service’s automated DNS management system.” This defect “caused endpoint resolution failures for DynamoDB.”

From there, cascading failures hit 70,000 companies, including more than 2,000 large enterprises.

What began as a DynamoDB endpoint failure soon spread. The blast radius reached EC2's internal launch workflow, Network Load Balancer (NLB) health checks, and other downstream services, and the outage dragged on for 15 hours.

One week later, Azure suffered its own outage after an “inadvertent configuration change” to its infrastructure, which again caused a DNS issue.

Microsoft reported that services using Azure Front Door “may have experienced latencies, timeouts, and errors.” It sequentially rolled back to the “last known good configuration,” continued to recover nodes, rerouted traffic through healthy nodes, and blocked customers from making configuration changes.

More than a dozen Azure services went down, including Databricks, Azure Maps, and Azure Virtual. The cascading failures spread to Microsoft 365, Xbox, and Minecraft, and beyond the Microsoft ecosystem to Alaska Airlines' key systems and the website of London's Heathrow Airport.

The global outage lasted more than 8 hours, with reports peaking at 18,000 Azure users and 20,000 Microsoft 365 users.

Human-caused misconfigurations are inevitable. In fact, more than 80% of misconfigured resources are the result of human error.

And given the complexity and scale of the modern cloud, if AWS and Azure are at risk of massive outages, you are too.

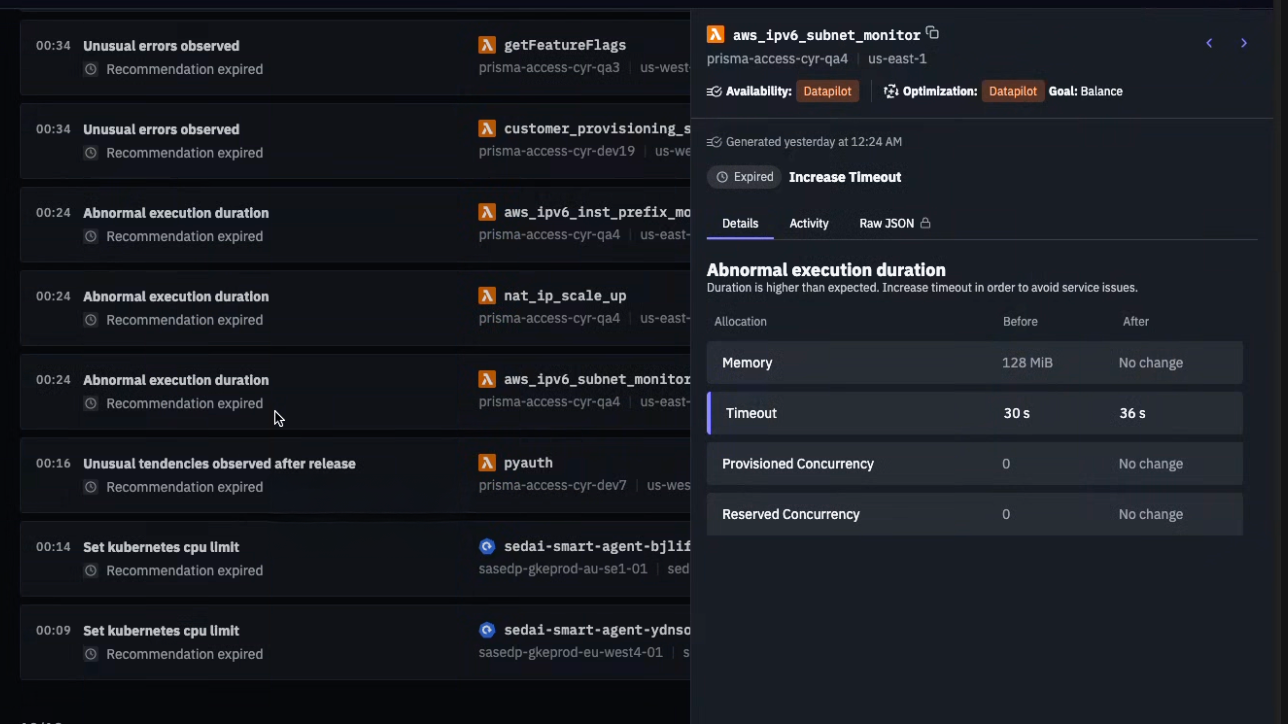

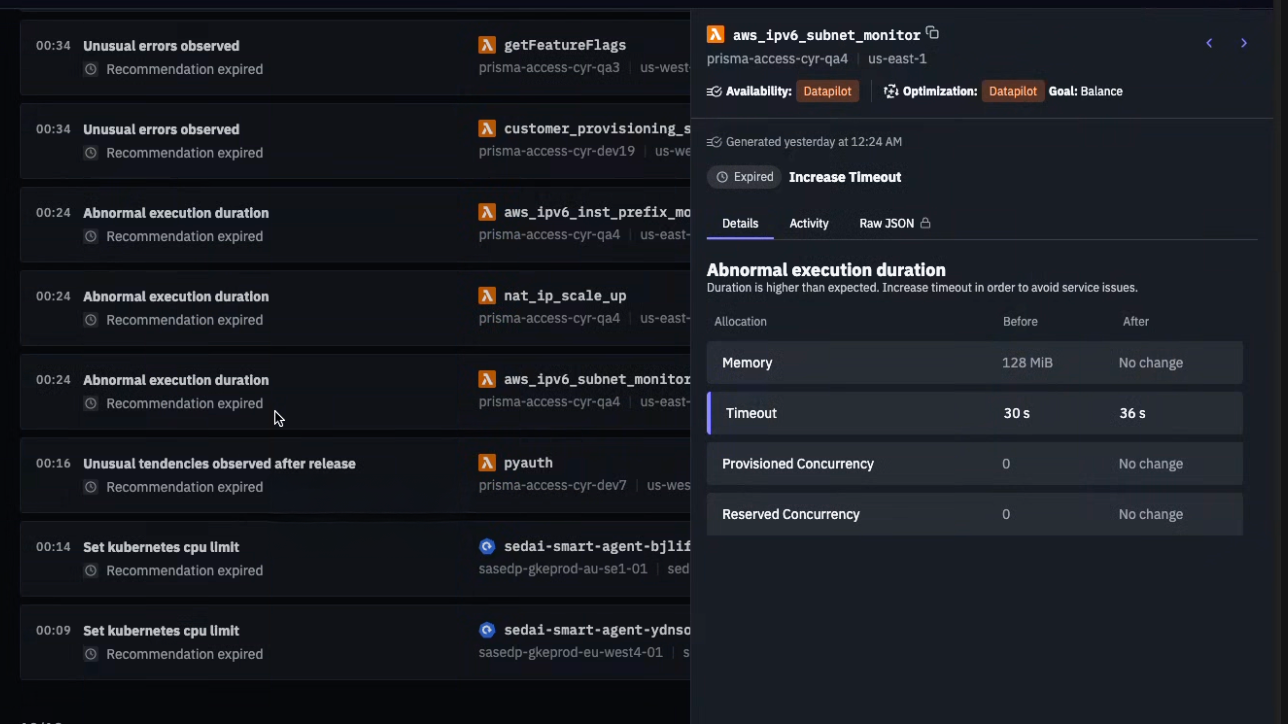

When AWS services started to experience problems, Sedai first picked up signals at 12:30 a.m. PDT, identifying an unusual increase in error rates across several applications for one of our customers. AWS, meanwhile, didn't announce the outage until 1:26 a.m.

The spike in error rates triggered an alert, prompting the system to attempt corrective actions.

Sedai can’t bring a cloud service like AWS or Azure back online, but our technology plays a critical role in early detection, empowering customers to respond faster and with full context.

Traditional observability platforms rely on fixed thresholds and pre-set rules to detect availability issues. However, this approach can’t always determine when an individual application is not performing as expected.

Sedai, meanwhile, uses patented ML models to learn the normal behavior of each cloud-based application, without any thresholds or rules. That uniquely allows our platform to catch the early warning signs of outages — along with other performance issues that engineering teams can’t predict.
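To make the difference concrete, here is a minimal Python sketch contrasting a fixed threshold with a per-application baseline. It is not Sedai's patented ML model, which this post doesn't describe; it swaps in a simple rolling mean and standard deviation (a z-score test). The 5% threshold, window size, z-limit, and sample error rates are illustrative assumptions.

```python
from collections import deque
from statistics import mean, stdev

FIXED_THRESHOLD = 0.05  # hypothetical global rule: alert when any app's error rate exceeds 5%


def fixed_threshold_alert(error_rate: float) -> bool:
    """Rule-based check: the same threshold applies to every application."""
    return error_rate > FIXED_THRESHOLD


class BaselineDetector:
    """Learns one application's normal error rate from a sliding window and flags
    samples far above it (a one-sided z-score test)."""

    def __init__(self, window: int = 30, z_limit: float = 4.0, min_samples: int = 10):
        self.history: deque = deque(maxlen=window)
        self.z_limit = z_limit
        self.min_samples = min_samples

    def observe(self, error_rate: float) -> bool:
        anomalous = False
        if len(self.history) >= self.min_samples:  # need enough samples for a baseline
            mu = mean(self.history)
            sigma = stdev(self.history) or 1e-9  # guard against a perfectly flat history
            anomalous = (error_rate - mu) / sigma > self.z_limit
        if not anomalous:
            self.history.append(error_rate)  # learn only from normal-looking samples
        return anomalous


if __name__ == "__main__":
    detector = BaselineDetector()
    # Hypothetical app whose error rate normally hovers around 0.2%.
    for rate in [0.002, 0.0021, 0.0019, 0.002, 0.0022, 0.0018, 0.002, 0.0021, 0.0019, 0.002]:
        detector.observe(rate)
    spike = 0.02  # error rate jumps to 2%
    print(fixed_threshold_alert(spike))  # False: 2% is below the global 5% rule
    print(detector.observe(spike))       # True: 2% is far above this app's own baseline
```

One design choice worth noting: the baseline detector only learns from samples it considers normal, so a sustained spike doesn't quietly become the new baseline. A production system would also need to account for seasonality, traffic drops, and many signals at once.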

Sedai serves as a pivotal intelligence layer during outages. Our platform:

While Sedai can't prevent AWS or Azure outages, it can autonomously prevent similar outages in our customers' environments before they impact users. Ultimately, autonomous systems like this have become necessary to handle the complexity of the cloud.

No doubt, the cloud will continue to experience disruptions. But as we move toward a future of more large-scale events (LSEs) like the AWS and Azure outages, companies and engineers must rely on autonomous systems to respond in real time.

Along with implementing autonomous systems like Sedai, we recommend several other key strategies for building a more resilient cloud, so that when an outage does happen, you're as ready as possible.

Here are three strategies you can use to mitigate the blast radius of an outage or failure.

The backbone of resilience, redundancy saves you when you can’t isolate or recover from failures. It ensures there is no single point of failure.
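One common way to apply redundancy at the client level is failover: call a primary replica, and fall back to a standby in another region when it fails. Below is a minimal Python sketch under that assumption. `call_with_failover`, `AllReplicasFailed`, and the region-specific functions are hypothetical names for illustration, not an AWS or Azure API.

```python
from typing import Callable, Sequence, Tuple, TypeVar

T = TypeVar("T")


class AllReplicasFailed(Exception):
    """Raised when every redundant replica has failed; args[0] maps replica name to error."""


def call_with_failover(replicas: Sequence[Tuple[str, Callable[[], T]]]) -> Tuple[str, T]:
    """Try each replica in priority order and return (name, result) for the first success."""
    errors: dict = {}
    for name, call in replicas:
        try:
            return name, call()
        except Exception as exc:  # a real client would catch only transient or network errors
            errors[name] = exc
    raise AllReplicasFailed(errors)


if __name__ == "__main__":
    def primary() -> dict:
        # Hypothetical call into a primary region whose endpoint can't be resolved.
        raise ConnectionError("endpoint resolution failed")

    def standby() -> dict:
        # Hypothetical call into a standby region that is healthy.
        return {"status": "ok"}

    region, result = call_with_failover([("us-east-1", primary), ("us-west-2", standby)])
    print(region, result)  # us-west-2 {'status': 'ok'}
```

Failover only helps if the standby is truly independent. If both replicas share a dependency, such as the same DNS resolution path, they can fail together.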

How to build redundancy:

Automation, monitoring, and testing are the keys to remaining operationally resilient over time.
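As one illustration of pairing automation with testing, the Python sketch below retries transient failures with capped exponential backoff and jitter, then uses a small fault-injection helper to verify that the retry logic actually recovers. The function and class names, retry counts, and delays are assumptions chosen for the example; the injectable `sleep` parameter lets a test record delays instead of waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    fn: Callable[[], T],
    attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying ConnectionError with capped exponential backoff and full jitter."""
    for attempt in range(attempts):
        try:
            return fn()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of retries: surface the failure to the caller
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))  # full jitter spreads out retries from many clients
    raise ValueError("attempts must be at least 1")


class FlakyDependency:
    """Fault injection for tests: fails the first `failures` calls, then succeeds."""

    def __init__(self, failures: int):
        self.remaining = failures
        self.calls = 0

    def __call__(self) -> str:
        self.calls += 1
        if self.remaining > 0:
            self.remaining -= 1
            raise ConnectionError("injected fault")
        return "ok"


if __name__ == "__main__":
    delays: list = []
    dependency = FlakyDependency(failures=2)
    print(retry_with_backoff(dependency, sleep=delays.append))  # ok
    print(dependency.calls, len(delays))  # 3 2
```

Running checks like this in CI, and injecting faults into real staging dependencies, helps confirm that recovery paths still work as the system changes.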

How to build operational resilience:

As these LSEs continue to grow in frequency and size, it’s more important than ever to adopt a multi-cloud strategy to avoid vendor lock-in, enhance reliability, and bolster compliance.

How to adopt a multi-cloud strategy:

Preparing for outages has become a core responsibility for every engineering leader. And in my experience, autonomous systems are now a necessary part of that job.

While current APMs do detect increases in error rates across several applications when outages happen, our platform at Sedai is unique in not relying on rules and thresholds, which don't always catch the early symptoms. In short, the complexity of the cloud requires a deeper level of intelligence.

The scale of modern computing exceeds our limits as human beings, yet we still expect engineers and SREs to configure and manage cloud resources themselves.

So as we push forward into the era of an all-encompassing and intricate cloud, it's more important than ever that engineering teams shift to autonomous systems like Sedai. It's time to stop leaving engineering teams to fend for themselves against growing outages beyond their scope, and instead give them tools that can identify and remediate issues. Autonomously.
